Every second, billions of sensors quietly measure the pulse of the physical world. They track the beat of a human heart, the vibration of a turbine, the motion of atoms inside industrial machinery, the movement of people through buildings, and the flow of energy across cities.

These sensors generate an enormous stream of data, yet almost none of it is easily accessible or comprehensible to the people who rely on it. The result is significant productivity loss: experts spend up to 40% of their time simply wrestling data into a usable form and getting insight from it — a burden that has given rise to the term “data janitors.”

Today, a doctor cannot simply ask a heart monitor why a patient’s rhythm looks unusual—or what exactly is unusual about it. An industrial facilities manager can’t talk to a building to understand why power usage spiked. An engineer cannot just query a production line about unexpected downtime or ask it to generate a new control signal with specific properties.

But what if they could?

What if anyone could have a natural conversation with any sensor or machine, and the sensor could respond? It could explain what it’s seeing, predict what might happen next, detect and localize anomalies, or even generate new signals to control a robot or machinery inside an intelligent factory.

That is the promise of Newton TimeFusion, Archetype’s new breakthrough 2B-parameter multimodal foundation model for the physical world and the newest member of the Newton model family. It represents a major step toward making real-world systems far more transparent, adaptive, and intelligent.

What Newton TimeFusion Can Do

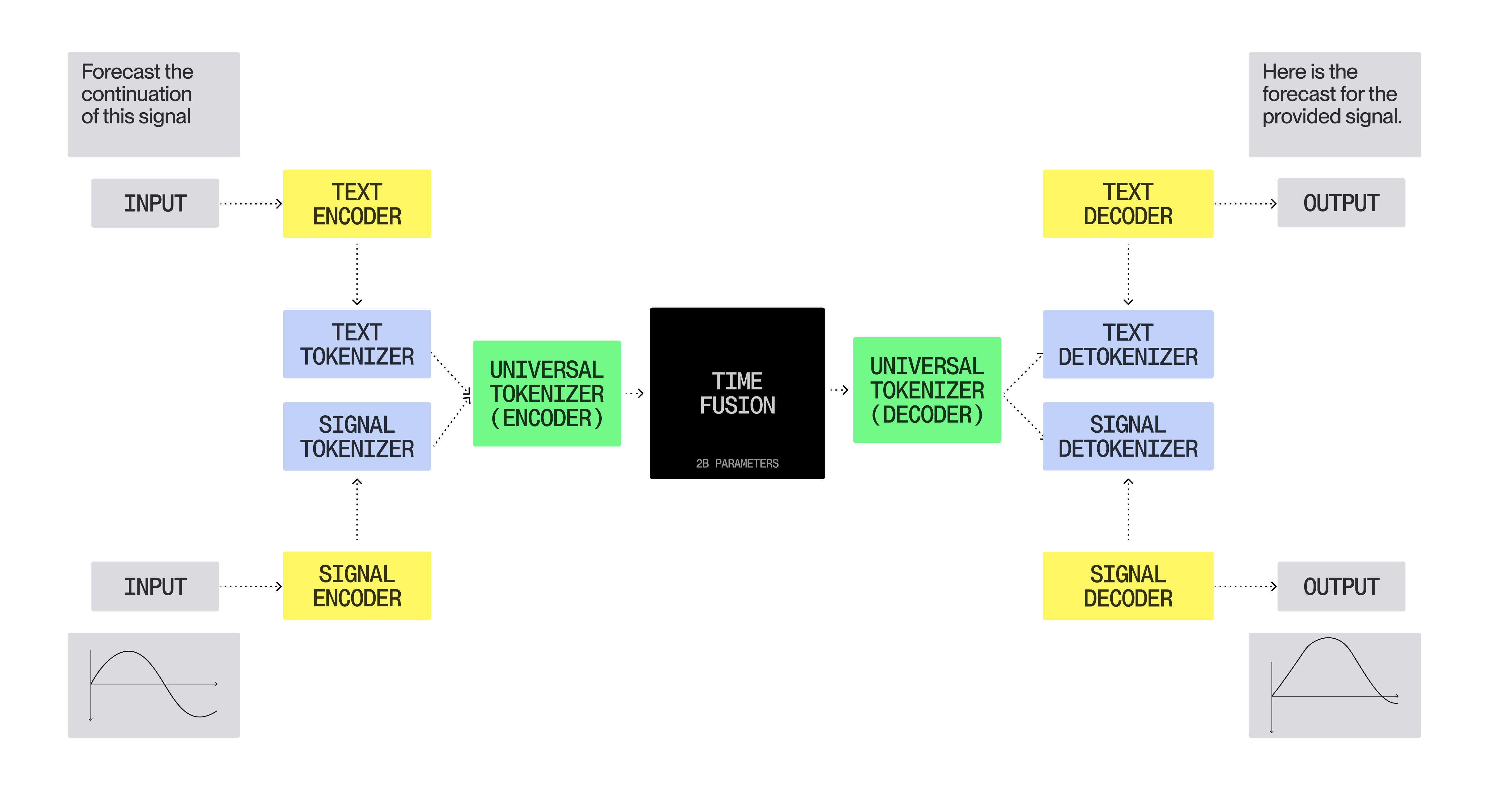

Newton TimeFusion is the first general sensor-language fusion model developed from the ground up by the team at Archetype AI: a 2-billion-parameter multimodal transformer that unifies human language and time-series sensor data in a single embedding space.

With it, you can describe a signal stream in plain English, ask questions about it, request transformations like filtering or forecasting, or even generate entirely new signals from natural language descriptions. This creates an intuitive interface to the physical world — one where you can talk to machines or physical processes the same way you would talk to a human operator. Capabilities include, but are not limited to:

- Describing any sensor signal in natural language, including identifying, detecting, and explaining anomalies.

- Transforming signals in a physically correct way — imputing missing data or applying operations such as filtering, smoothing, or forecasting.

- Generating synthetic sensor signals directly from a natural language prompt.

Let’s briefly review what it can do.

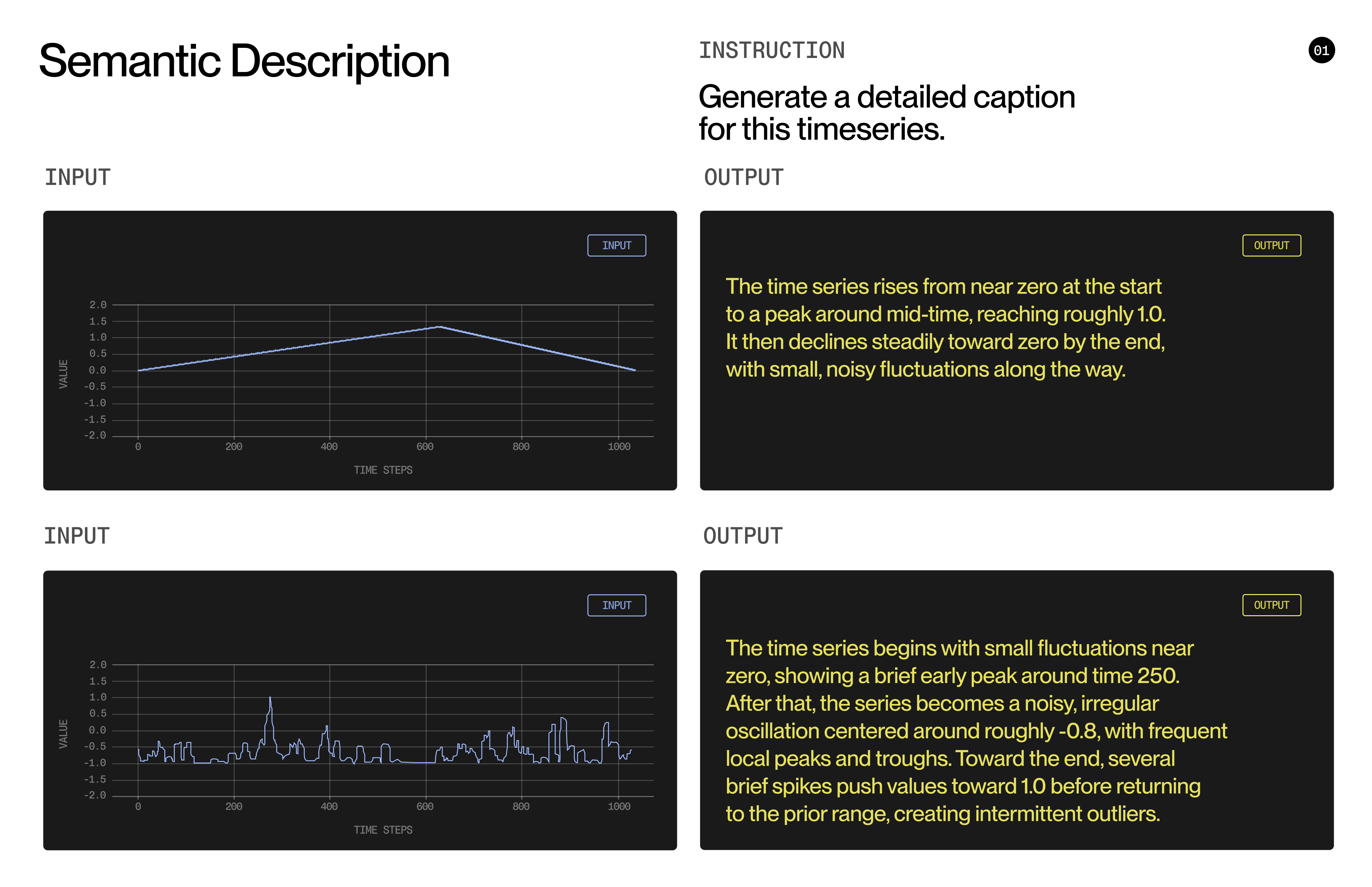

Signal-to-Text: Explaining What a Sensor Is Seeing

We begin with the most fundamental capability: TimeFusion can describe complex sensor patterns in natural language, making them interpretable to anyone — not just experts.

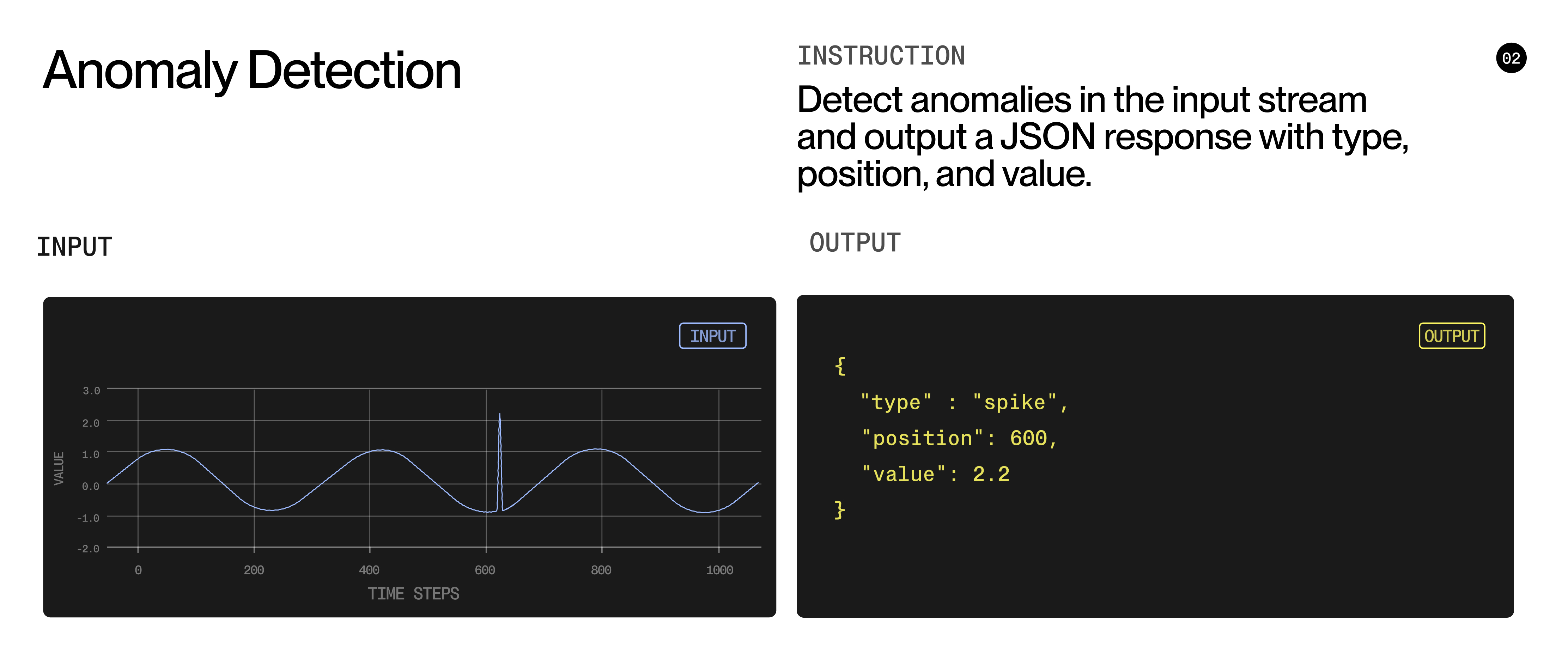

In many real-world use cases, a full description of a signal isn’t necessary. Instead, we often need highly targeted information — a specific insight, extracted on demand, in a form that is immediately useful. For example, we can ask TimeFusion to detect and quantify anomalies within a sensor stream. This shifts the interaction from general observation and description to precise, actionable insight.

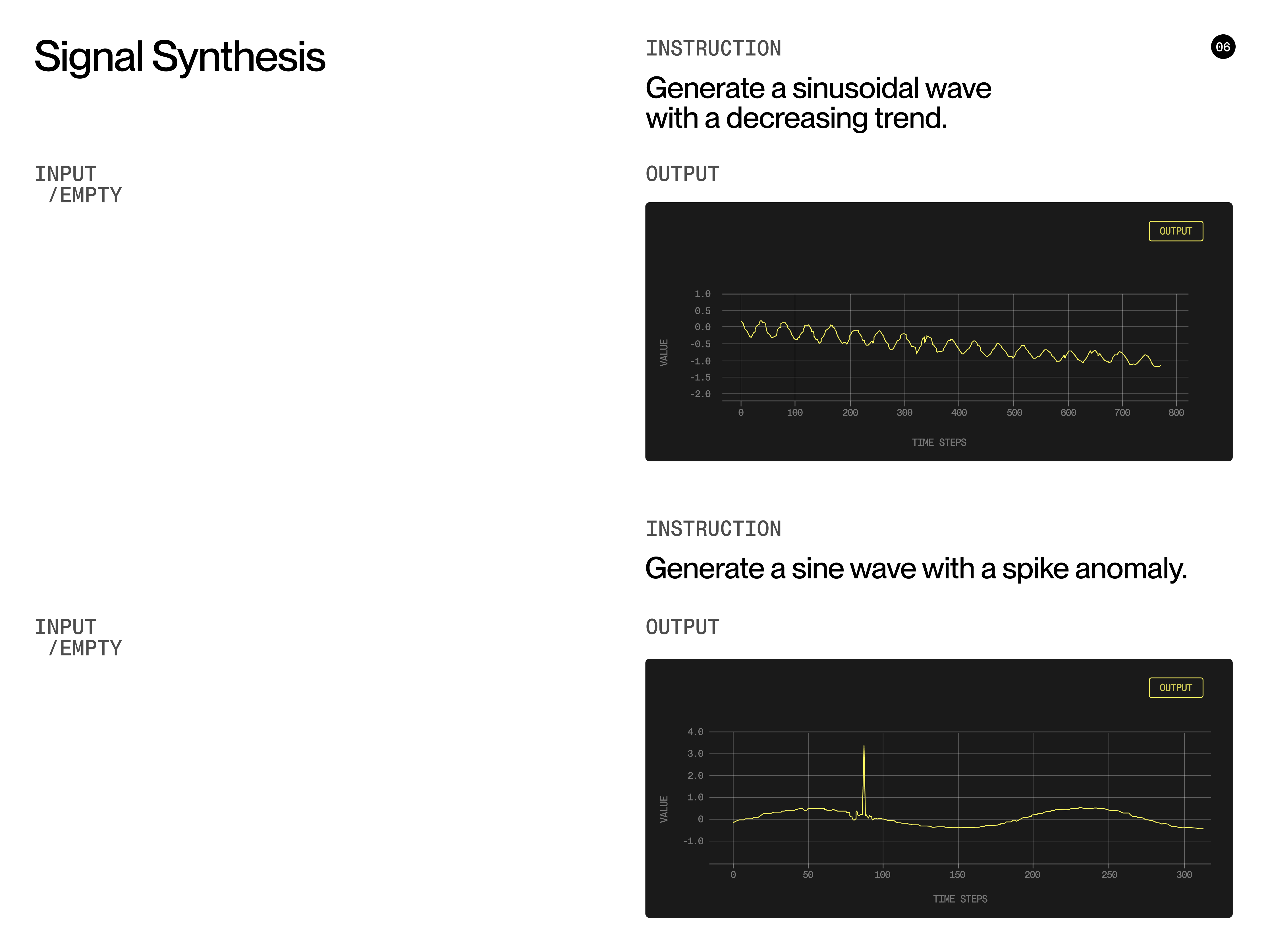

Text-to-Signal: Generate Signals With Natural Language

TimeFusion can also generate sensor data directly from a natural language description — a capability unprecedented in time-series models. The model can produce realistic time-series outputs on demand, enabling machine control, system testing, and rich “what-if” simulations, all from natural language.

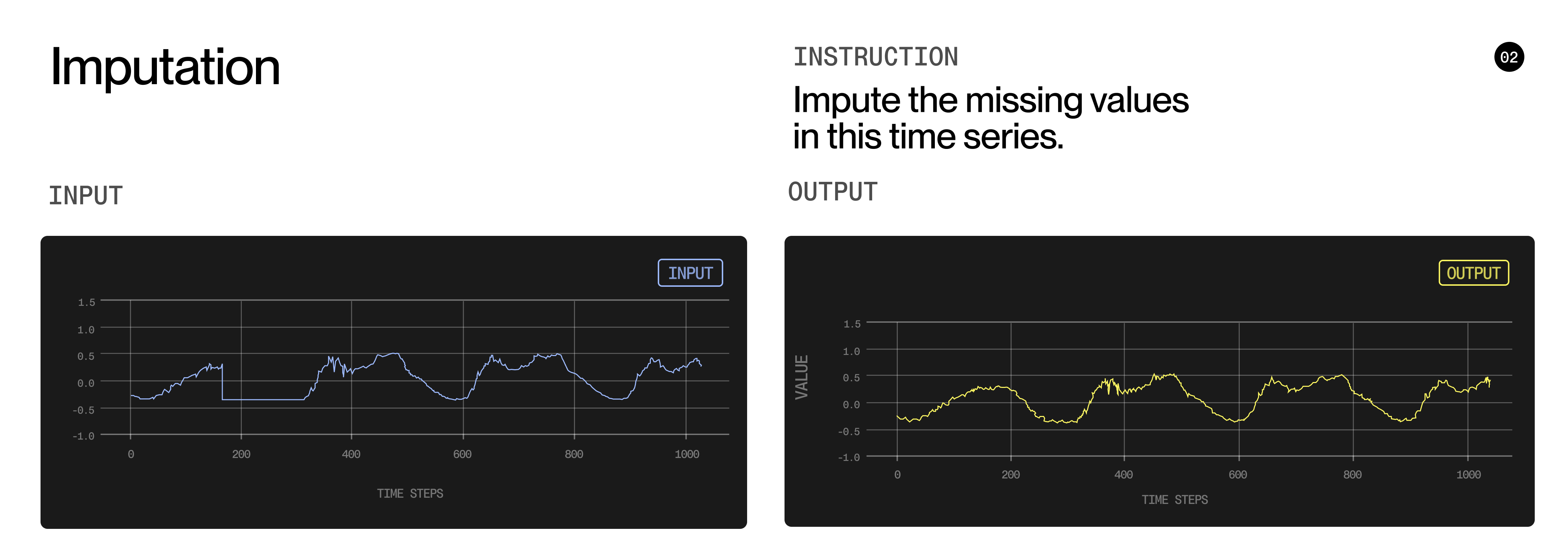

The model can also generate new signals by combining example inputs with a text prompt. For example, TimeFusion can detect missing segments in a signal and realistically fill the gaps — a common problem in industrial automation that today requires manual cleaning and interpolation. TimeFusion can automate this process with a single instruction.

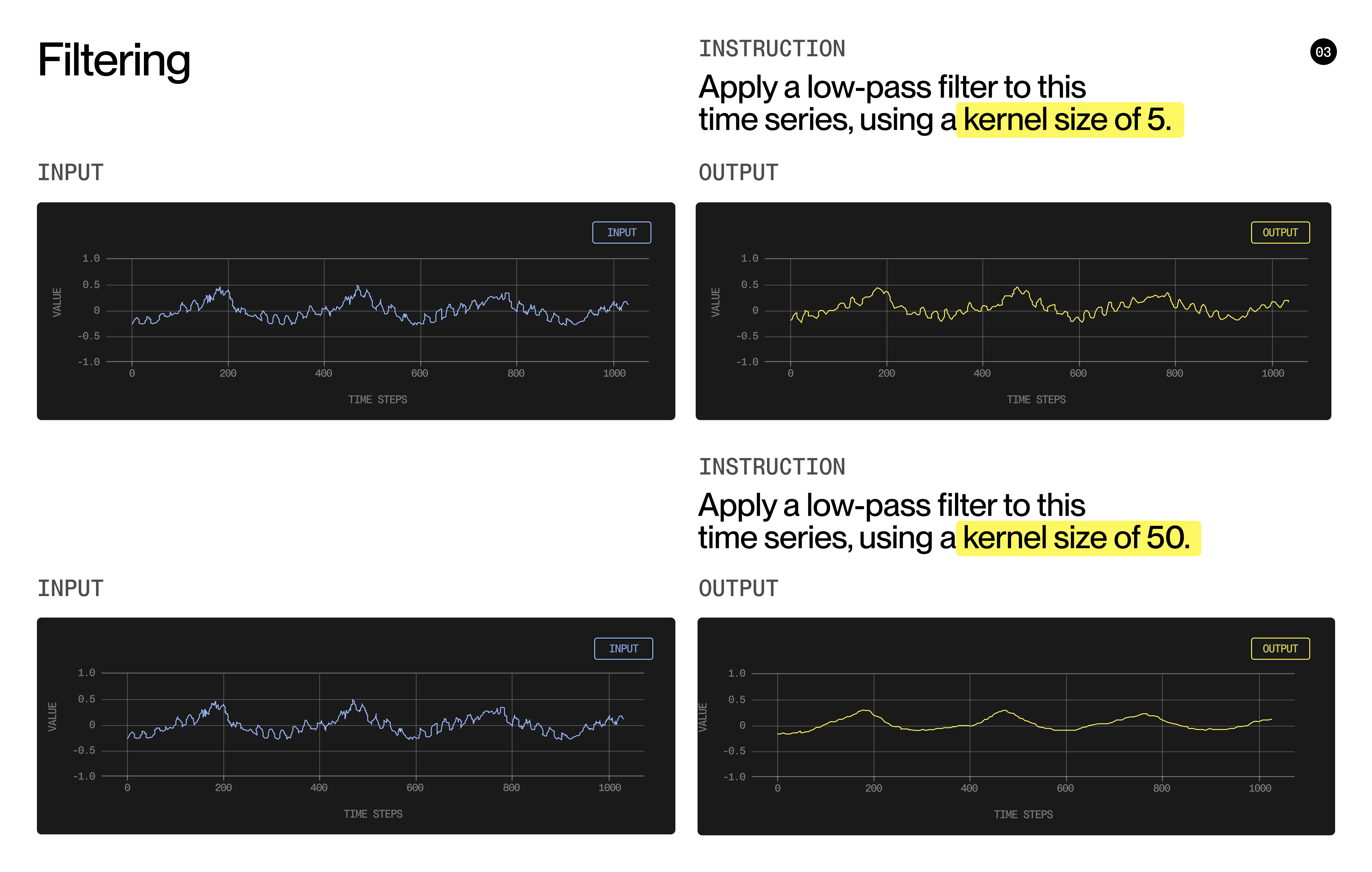

In the example below, the model filters the signal using a simple natural-language prompt, generating a smoother output. This allows an operator to handle noise and anomalous behavior in real time. It also unlocks powerful simulation, augmentation, and “what-if” analysis capabilities — without relying on domain-specific models.

Building TimeFusion with Universal Tokens

General-purpose LLMs such as GPT-5 or Claude Sonnet do not understand time-series signals natively. They approximate sensor understanding in one of two ways: by serializing the signal into text tokens, a non-native representation for physical processes, or by rendering the signal as a plot and taking it in as an image. Either conversion destroys essential structure and relationships encoded in the raw sensor data, making true understanding impossible.

To genuinely understand sensors — and explain them to humans — we need a model that speaks both the native language of physical signals and the native language of people at the same time.

This is exactly what TimeFusion achieves. Its core innovation is the concept of Universal Tokens, a unified vocabulary shared between time-series data and natural language. Universal Tokens create a shared intermediate representation — a “lingua franca” between human language and physical signals.

A 9.5M-parameter 1D Transformer-based autoencoder compresses raw time-series data into discrete tokens drawn from a vocabulary of 32,768 codes, using Finite Scalar Quantization (FSQ). Each token captures a rich, semantically meaningful pattern of sensor behavior, much as text tokens capture subword meaning in language models.
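As a rough illustration of how FSQ yields a fixed-size vocabulary, the sketch below bounds each latent dimension, rounds it to one of a few levels, and packs the per-dimension levels into a single integer token id. The level configuration (five dimensions of 8 levels each, so 8^5 = 32,768), the function names, and the simplified tanh bounding are assumptions made for illustration; the article does not specify them, and the learned encoder that would produce the latent vector is left out.

```python
import math

# Assumed level configuration: 5 latent dimensions x 8 levels = 32,768 codes.
# The article gives only the total vocabulary size, not this factorization.
LEVELS = [8, 8, 8, 8, 8]
CODEBOOK_SIZE = math.prod(LEVELS)


def fsq_quantize(latent):
    """Round each latent value to an integer level index in [0, n_levels - 1]."""
    indices = []
    for z, n_levels in zip(latent, LEVELS):
        bounded = (math.tanh(z) + 1.0) / 2.0  # squash the value into (0, 1)
        indices.append(round(bounded * (n_levels - 1)))
    return indices


def indices_to_token(indices):
    """Pack per-dimension level indices into one token id (mixed-radix encoding)."""
    token = 0
    for idx, n_levels in zip(indices, LEVELS):
        token = token * n_levels + idx
    return token


def token_to_indices(token):
    """Inverse of indices_to_token: recover the per-dimension level indices."""
    indices = []
    for n_levels in reversed(LEVELS):
        indices.append(token % n_levels)
        token //= n_levels
    return indices[::-1]


# A hypothetical latent vector, standing in for the encoder output of one signal patch.
latent = [-10.0, 10.0, -10.0, 10.0, -10.0]
token_id = indices_to_token(fsq_quantize(latent))
```

Because the codebook is implied by the rounding grid rather than learned, every id from 0 to `CODEBOOK_SIZE - 1` corresponds to a valid combination of levels.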

These time-series tokens are then combined with standard SentencePiece Byte-Pair Encoding text tokens to form the Universal Token vocabulary: a single joint vocabulary shared by both sensors and language. TimeFusion operates directly over this hybrid sequence, allowing the model to naturally attend to relationships between words and sensor patterns. With Universal Tokens, the model can describe, interpret, transform, or generate any sensor signal — combining the structure of time-series data with the expressive power of natural language.
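One common way to build such a joint vocabulary is to place the sensor codes directly after the text ids, so that a single embedding table covers both. The sketch below assumes that layout; the text vocabulary size of 32,000 and all function names are hypothetical, since the article states only the 32,768 sensor codes and not how the two vocabularies are merged.

```python
TEXT_VOCAB_SIZE = 32_000    # hypothetical; the article does not give this number
SENSOR_VOCAB_SIZE = 32_768  # sensor codebook size stated in the article
SENSOR_OFFSET = TEXT_VOCAB_SIZE
JOINT_VOCAB_SIZE = TEXT_VOCAB_SIZE + SENSOR_VOCAB_SIZE


def sensor_to_joint(code):
    """Shift a sensor code into the joint id space, after all text ids."""
    if not 0 <= code < SENSOR_VOCAB_SIZE:
        raise ValueError(f"sensor code out of range: {code}")
    return SENSOR_OFFSET + code


def is_sensor_token(joint_id):
    """True if a joint id refers to a sensor code rather than a text token."""
    return SENSOR_OFFSET <= joint_id < JOINT_VOCAB_SIZE


def build_sequence(text_ids, sensor_codes):
    """Concatenate a text prompt and a tokenized signal into one hybrid sequence."""
    return list(text_ids) + [sensor_to_joint(c) for c in sensor_codes]


# Toy ids standing in for a tokenized prompt and a tokenized signal.
hybrid = build_sequence([5, 17], [0, 3640])
```

With this layout the model sees one flat sequence of integers, and attention between words and sensor patterns needs no modality-specific machinery.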

TimeFusion itself is a 2-billion-parameter decoder-only transformer, trained end-to-end on 23 billion multimodal tokens across three major stages:

- Pre-training: 20B tokens of mixed sensor and text data

- Instruction-tuning: synthetic multimodal tasks spanning signal-to-text, text-to-signal, and signal-to-signal

- Post-training: Reinforcement Learning from AI Feedback (RLAIF) using thousands of preference pairs to refine reasoning quality and alignment

By using a unified next-token prediction objective across all modalities, TimeFusion treats every task — forecasting, captioning, anomaly detection, imputation, synthesis — as a single sequence-generation problem. This gives it a level of flexibility and generalization that traditional task-specific time-series models cannot achieve.
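To make the "single sequence-generation problem" concrete, the sketch below applies the same next-token loss to two differently ordered toy sequences: one shaped like captioning (signal then text) and one shaped like forecasting (prompt, signal, then more signal). The ten-id toy vocabulary, the uniform stand-in model, and the function names are all assumptions for illustration and do not reflect the actual training code.

```python
import math

VOCAB_SIZE = 10  # toy joint vocabulary: ids 0-3 stand for text, 4-9 for sensor codes


def next_token_pairs(sequence):
    """Return (context, target) pairs for every position after the first."""
    return [(sequence[:i], sequence[i]) for i in range(1, len(sequence))]


def average_nll(sequence, predict_proba):
    """Mean negative log-likelihood of each next token given its context."""
    pairs = next_token_pairs(sequence)
    total = sum(-math.log(predict_proba(context)[target]) for context, target in pairs)
    return total / len(pairs)


def uniform_model(context):
    """Stand-in for a trained model: assigns equal probability to every token."""
    return [1.0 / VOCAB_SIZE] * VOCAB_SIZE


# Captioning-shaped example: sensor tokens first, then the text that describes them.
captioning = [4, 7, 9, 5, 0, 2, 1]
# Forecasting-shaped example: a text prompt, observed sensor tokens, then future ones.
forecasting = [3, 1, 4, 7, 9, 8, 6]

losses = [average_nll(seq, uniform_model) for seq in (captioning, forecasting)]
```

The loss function never inspects which modality a token belongs to; only the ordering of tokens in the sequence distinguishes one task from another.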

How TimeFusion Compares to State-of-the-Art LLMs

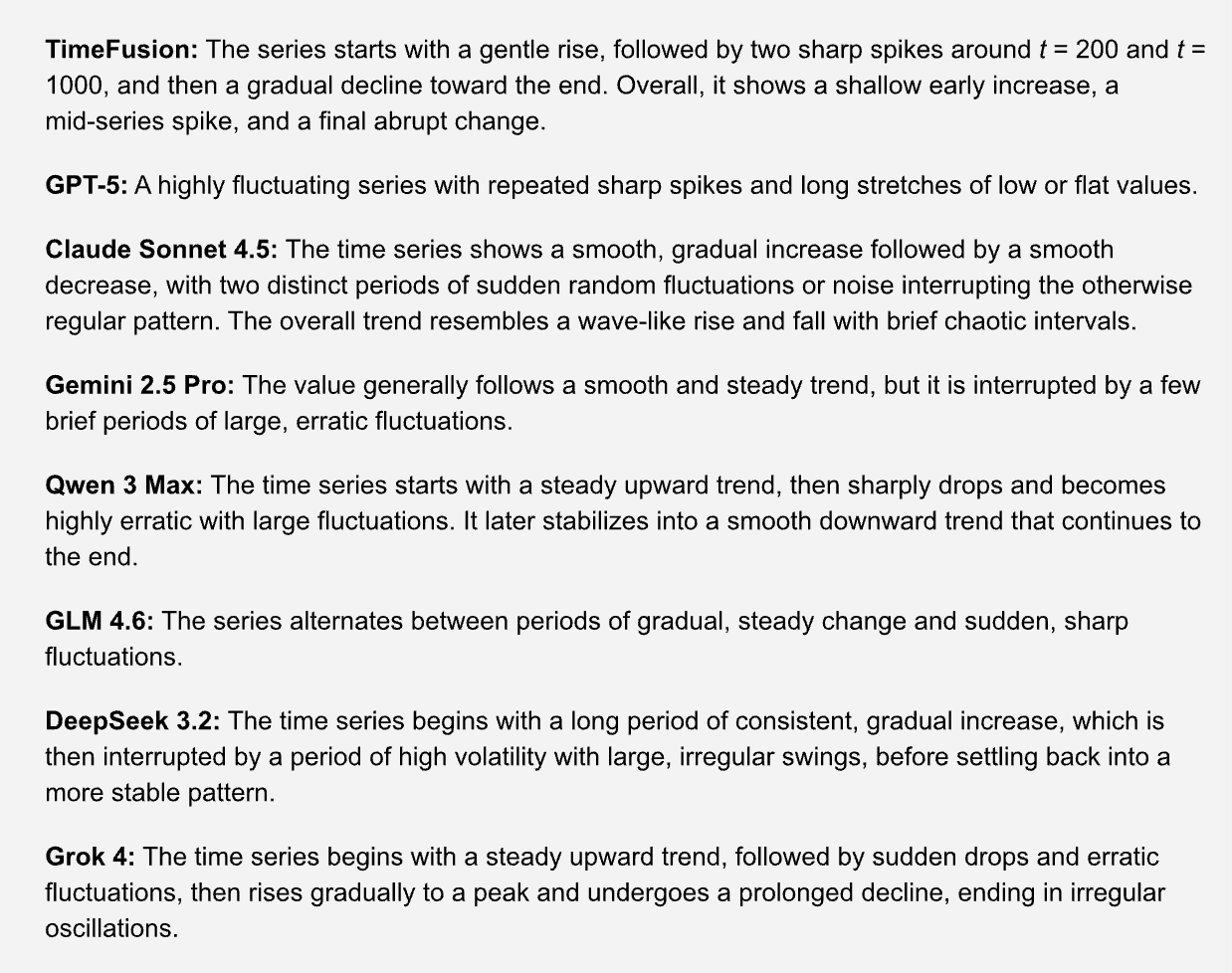

Although TimeFusion is a relatively small 2B-parameter model, it outperforms much larger models like GPT-5 and Claude Sonnet on core sensor–language tasks.

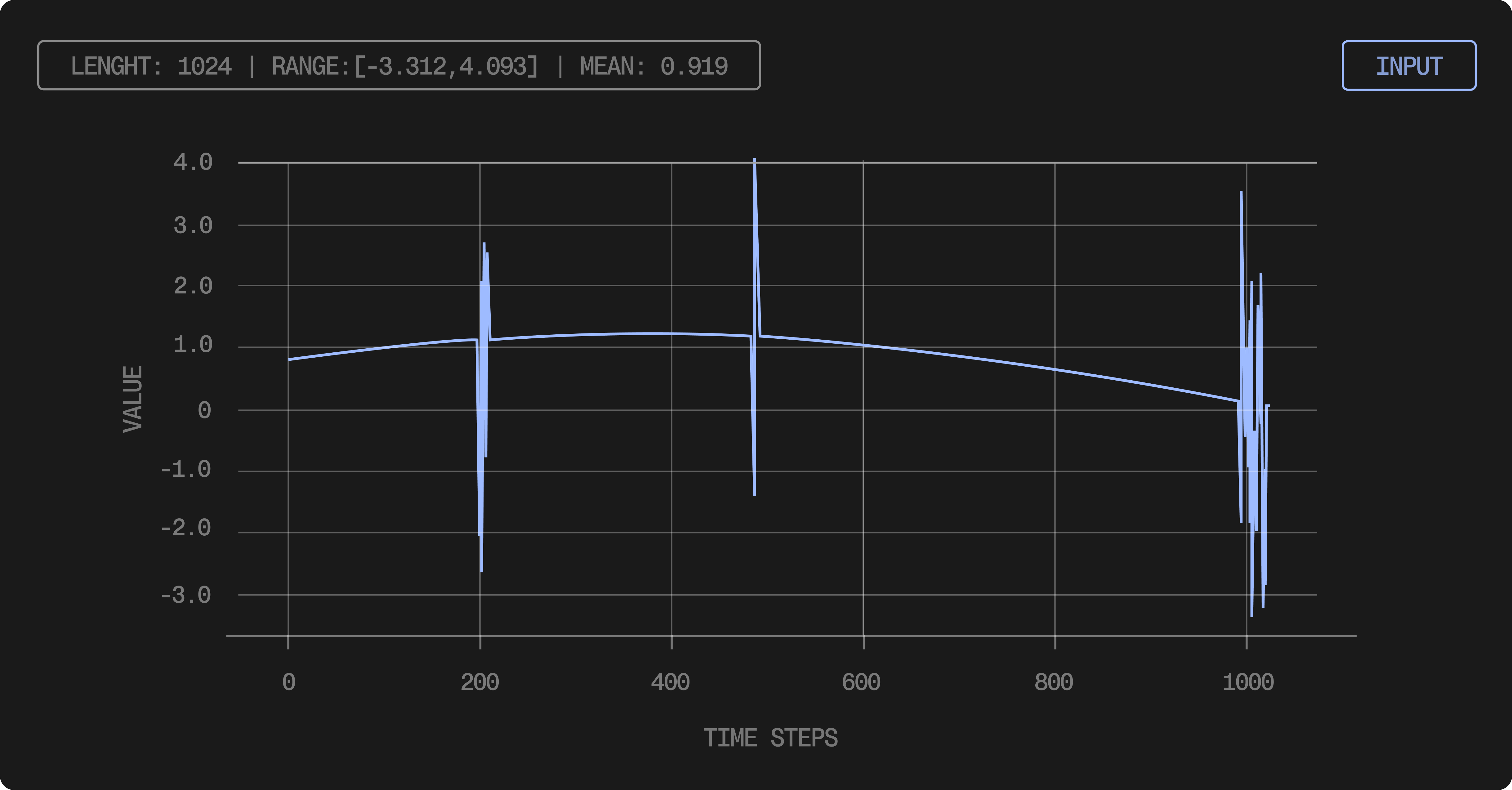

In the examples below, we asked TimeFusion and seven SOTA models to caption the same signal. GPT-5, Gemini 2.5 Pro, and GLM 4.6 missed the main trend entirely, while others noted irregularities but lacked precision. TimeFusion was the only model to correctly identify the anomalies at t = 200 and t = 1000, as well as the mid-series spike.

The difference is profound. In multiple examples, GPT-5, Sonnet, Gemini, GLM, and DeepSeek either missed key anomalies or misidentified their positions. TimeFusion consistently got them right — because general LLMs treat signals as text, while TimeFusion understands them natively.

The Road Ahead: Toward True Physical AI

TimeFusion is the first step toward a broader vision of Physical AI that fuses the native behavior of physical systems with human-level understanding. As the model gains more context and multimodal awareness, entirely new capabilities emerge. Imagine asking:

“Analyze this heart-rate signal in the context of the patient’s activity, medical history, and stress level. Summarize the risks.”

Or running rich operational “what-if” scenarios:

“If energy demand increases by 10% and temperature drops 5 degrees, what load will this substation experience?”

Here, Newton TimeFusion becomes a true Physical AI layer — able to reason about, predict, and simulate real-world behavior. Because Universal Tokens naturally extend to other modalities, this approach can grow to include images, audio, radar, logs, and more.

By unifying natural language with sensor data, TimeFusion creates an intelligence layer for the physical world — one where anyone can ask what machines are sensing and receive clear, actionable answers. This enables real-time collaboration between humans and intelligent systems across healthcare, manufacturing, smart cities, mobility, energy, and beyond.

The result? Billions of silent sensors and simple devices become active, adaptive participants in our environments, ushering in the era of true Physical AI.
