
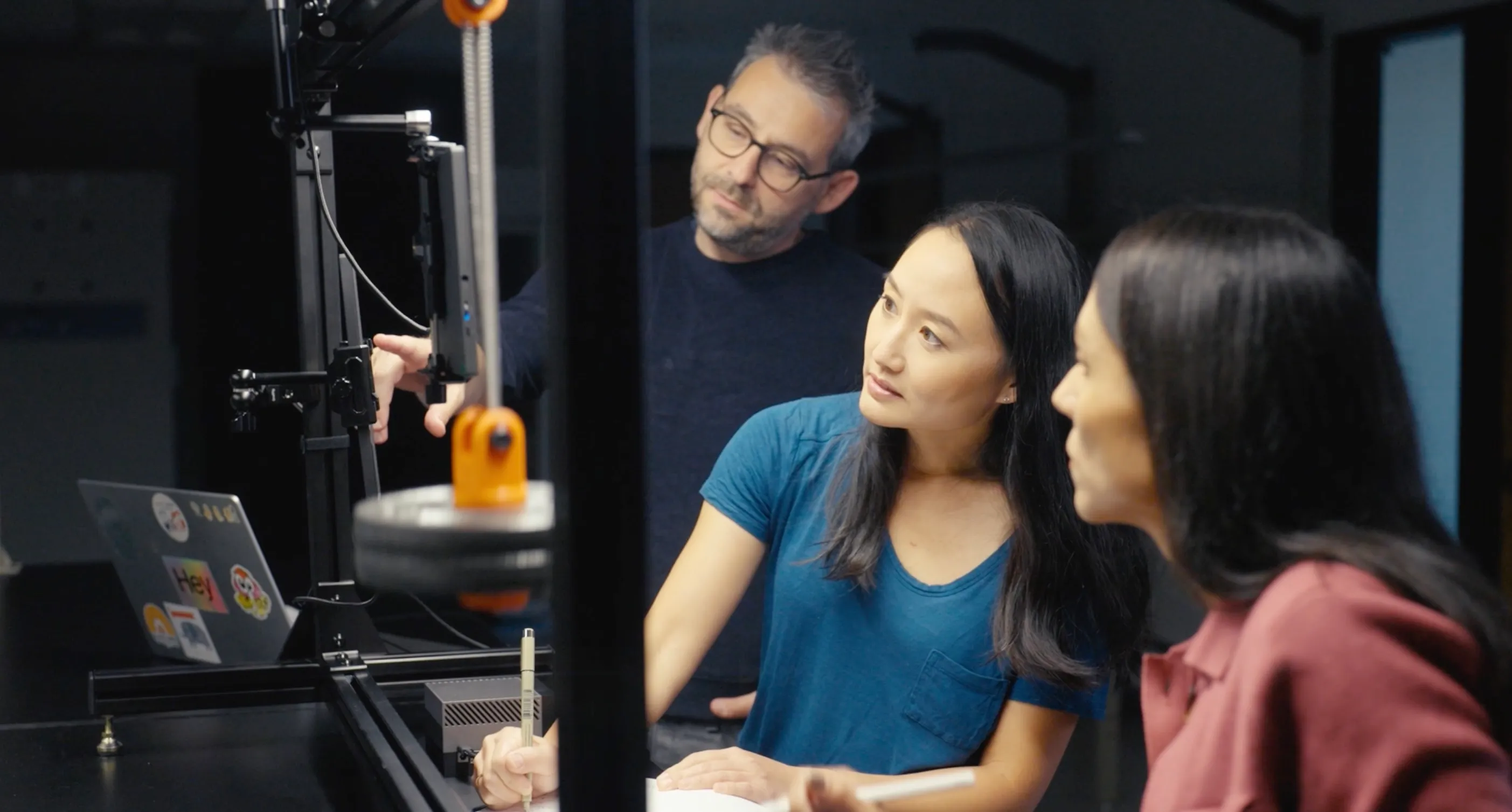

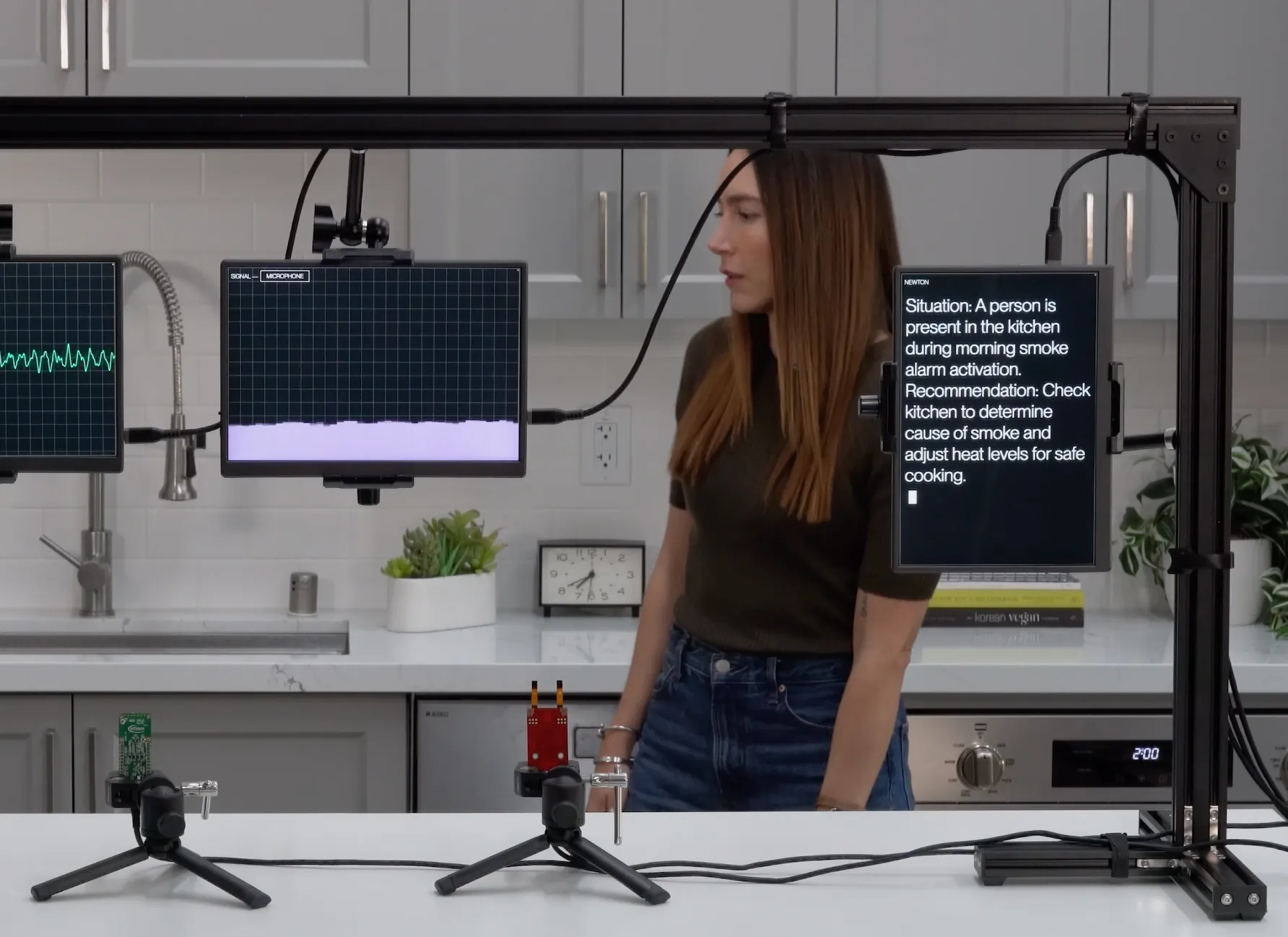

Newton, our model that powers Physical Agents, fuses diverse physical data — such as current, temperature, or vibration — with semantically rich inputs like language and video. By combining these signals, Newton reasons across modalities and uncovers patterns of physical behavior that are difficult or impossible to detect from any single source.

our model

LLMs vs. Real World

The data that keeps physical industries running — the signals and measurements that reflect how the real world actually works — is fundamentally different from the data and tasks of the online world. It requires a model designed and trained from the ground up for these challenges. That model is Newton.

LLMs:

Trained on 80% text, 10% images, and <5% video.

4 modalities: text, video, images, and audio.

Each modality is treated individually.

Real world:

50% physical sensor data, 20% video, <5% text.

Hundreds of sensor modalities can be critical for every use case.

Requires fusion of multiple sensor modalities and data streams.

Newton

One Model.

Endless Real-World Applications.

01

Physical Agents for every use case

02

Pretrained Foundation Model programmed with natural language

03

Connect 100s of sensor types

Newton projects physical sensor data and context into a single embedding space, creating a real-time physical world representation that customers can interact with using natural language.
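The idea of projecting different inputs into one embedding space can be illustrated with a minimal sketch. This is not Newton's architecture, which the page does not describe. The random projection matrices stand in for learned per-modality encoders, and `EMBED_DIM`, `make_projection`, `project`, and the toy feature vectors are all hypothetical names and values chosen for illustration.

```python
import math
import random

EMBED_DIM = 8  # hypothetical size of the shared embedding space


def make_projection(input_dim, embed_dim, seed):
    """Random linear map standing in for a learned per-modality encoder."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(input_dim)]
            for _ in range(embed_dim)]


def project(matrix, features):
    """Apply the linear map, then L2-normalize the resulting vector."""
    vec = [sum(w * x for w, x in zip(row, features)) for row in matrix]
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def cosine(a, b):
    """Cosine similarity of two unit-length vectors (a plain dot product)."""
    return sum(x * y for x, y in zip(a, b))


# Toy inputs (assumed): a short window of sensor readings and a tiny
# bag-of-words vector for a text prompt. Their input sizes differ.
sensor_window = [0.1, 0.4, 0.35, 0.9]
text_features = [1.0, 0.0, 1.0]

sensor_encoder = make_projection(len(sensor_window), EMBED_DIM, seed=0)
text_encoder = make_projection(len(text_features), EMBED_DIM, seed=1)

# Both modalities end up as vectors of the same length, so they can be
# compared directly even though the raw inputs had different shapes.
sensor_vec = project(sensor_encoder, sensor_window)
text_vec = project(text_encoder, text_features)
similarity = cosine(sensor_vec, text_vec)
print(len(sensor_vec), len(text_vec), round(similarity, 3))
```

With random encoders the similarity value carries no meaning. In a trained system the encoders would be learned so that related sensor windows and descriptions land close together.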

Physics World Model

Semantic World Model

how it works

Machine behavior (time series sensors): motion, power, electrical, etc.

Human behavior (video): presence, activity, intent

Context (text): scene description, customer prompts

_01

Can ingest and analyse data from many kinds of physical sensors without retraining the model.

_02

Allows interaction with physical data in natural language, like talking with a human.

Machine behavior: trajectory prediction, multivariate analysis, anomaly detection, classification

Human behavior: presence, activity, intent

benchmarks

Newton is built to process physical sensor data and solve real-world problems by understanding spatial and temporal behavior patterns in complex physical systems. As such, Newton outperforms both general-purpose and narrow-purpose models on tasks grounded in the physical world. Learn more in a recent paper by the Archetype AI team, "A Phenomenological AI Foundation Model for Physical Signals."

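Figure: Mean square error [e^-2] by dataset, zero shot vs. target trained. Bar values are listed in the order they appear on the chart.

| Dataset | Quantity | Bar values |
|---|---|---|
| Portugal | Electricity consumption | 1.2, 1.11 |
| Melbourne | Electricity consumption | 1.3, 1.19 |
| Turkey | Power consumption | 2.7, 1.3 |
| Electrical transformer | Oil temperature | 3.0, 2.76 |
| Germany | Meteorological quantities | 3.0, 2.76 |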
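For readers unfamiliar with the metric in the chart above, mean square error averages the squared differences between forecast and observed values, so lower is better. The following is a minimal sketch of that calculation. The function name `mean_squared_error` and the sample numbers are illustrative assumptions and are not taken from the benchmark data.

```python
def mean_squared_error(actual, predicted):
    """Average of squared differences between observed and forecast values."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical observations and forecasts (not benchmark data).
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.1, 1.9, 3.2, 3.8]

mse = mean_squared_error(actual, predicted)
print(round(mse, 4))  # squared errors 0.01, 0.01, 0.04, 0.04 average to 0.025
```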

Object Counting: VSI Benchmark Accuracy

prompt: "how many {object} do you see?"

| Model | Mean accuracy |
|---|---|
| Claude Sonnet 4.5 | 0.00% |
| Qwen VL 2.5 7B | 30.64% |
| GPT5 Mini | 46.55% |
| Gemini 2.5 Flash Lite | 49.29% |
| Newton 7B | 51.45% |
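As a rough illustration of how an object-counting accuracy figure could be produced, the sketch below fills the prompt template quoted above and scores predictions by exact match. Exact-match scoring is an assumption made here for simplicity, and the benchmark's own scoring rule may differ. `build_prompt`, `mean_accuracy`, and the sample counts are hypothetical.

```python
PROMPT_TEMPLATE = "how many {object} do you see?"


def build_prompt(object_name):
    """Fill the prompt template with the object category being counted."""
    return PROMPT_TEMPLATE.format(object=object_name)


def mean_accuracy(predictions, ground_truth):
    """Percentage of predicted counts that exactly match the true counts."""
    if len(predictions) != len(ground_truth) or not ground_truth:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(1 for p, t in zip(predictions, ground_truth) if p == t)
    return 100.0 * correct / len(ground_truth)


# Hypothetical model answers and true counts (not benchmark data).
predictions = [3, 2, 5, 1]
ground_truth = [3, 2, 4, 1]

print(build_prompt("chairs"))
print(mean_accuracy(predictions, ground_truth))  # 3 of 4 correct: 75.0
```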

Object Counting: VSI Benchmark Accuracy

prompt: "how many {object} do you see?"

| Model | Accuracy |
|---|---|
| Claude Sonnet 4.5 | 0.00% |
| Qwen VL 2.5 7B | 30.41% |
| GPT5 Mini | 30.93% |
| Gemini 2.5 Flash Lite | 31.44% |
| Newton 7B | 34.54% |

blog

Oct 17, 2024

We are excited to share a milestone in our journey toward developing a physical AI foundation model. In a recent paper by the Archetype AI team, "A Phenomenological AI Foundation Model for Physical Signals," we demonstrate how an AI foundation model can effectively encode and predict physical behaviors and processes it has never encountered before, without being explicitly taught underlying physical principles. Read on to explore our key findings.

Dec 12, 2024

Humans can instantly connect scattered signals—a child on a bike means school drop-off; breaking glass at night signals trouble. Despite billions of sensors, smart devices haven’t matched this basic human skill. Archetype AI’s Newton combines simple sensor data with contextual awareness to understand events in the physical world, just like humans do. Learn how it’s transforming electronics, manufacturing, and automotive experiences.

Aug 26, 2024

In a world where devices constantly collect and transmit data, many organizations struggle to harness its potential. At Archetype AI, we believe AI is the key to transforming this raw data into actionable insights. Join us as we explore how the convergence of sensors and AI can help us better understand the world around us.