Modern AI systems excel at language, while the physical world is expressed through sensor signals and time-series data. Bridging these two domains has traditionally required complex tooling and deep domain expertise. Although sensor data captures physical behavior with great precision, it remains difficult to interpret and act on at scale.

We present a parameter-efficient approach for aligning physical world encoders with large language models, enabling grounded natural-language reasoning over physical signals. The result is a simpler, more intuitive way for humans to understand and interact with complex physical systems.

Language models are remarkably good at reading and writing.

But the real world does not speak in sentences.

Instead, it speaks through sensor signals: vibration, motion, pressure, electric current, magnetic fields, and many others. These signals typically arrive as time-series data—streams of numbers changing over time that measure how physical systems behave. A car driving over a road, a motor spinning under load, machines operating on a factory floor, or fluctuations across an electrical grid all generate such data.

This sensor data is rich and precise. It tells us what actually happened in the physical world. But data alone is not insight. Data gains value only once it is interpreted, that is, once raw measurements are turned into understanding, recommendations, and ultimately actions. Knowing that a signal changed is not the same as knowing why it changed or what should be done next.

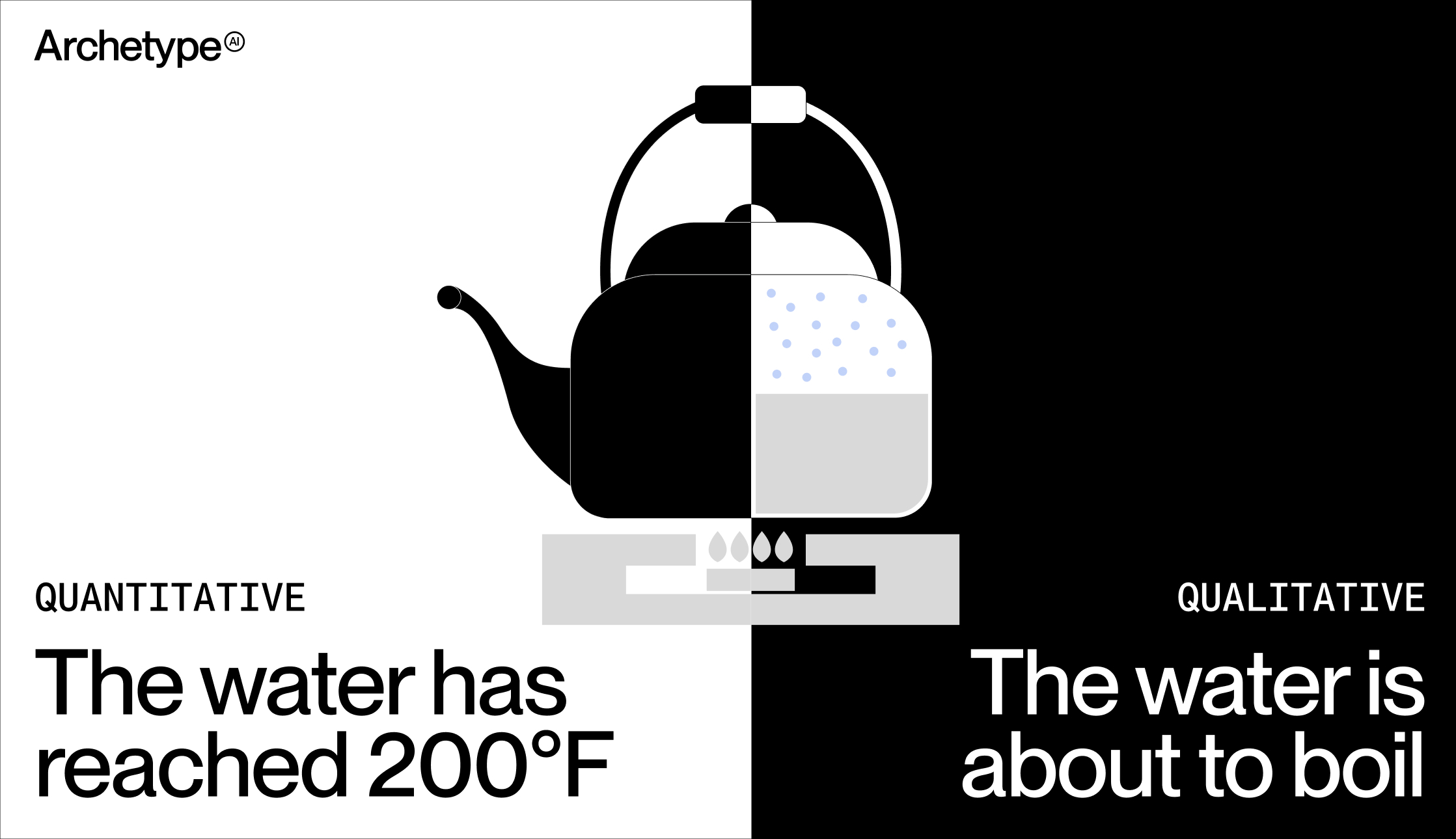

Quantities and meanings

Human understanding of the physical world always involves a duality.

On one side are objective measurements: the temperature of water in a kettle, the vibration amplitude of a machine, the concentration of carbon dioxide in a room. These measurements describe reality with precision.

On the other side are qualitative interpretations, which depend on context: the water is about to boil, the machine is behaving abnormally, the room needs ventilation. These interpretations guide decisions and actions—turn off the stove, schedule maintenance, open a window.

Both levels are essential. Numbers tell us what is happening; meaning tells us what it implies.

Humans constantly translate between these two worlds—the world of atoms and the world of words. But this translation becomes difficult when signals are unfamiliar or require specialized expertise. Few people know what a specific CO₂ concentration implies or how to interpret subtle changes in vibration spectra.

At the same time, the volume of data produced by the physical world is growing rapidly. Processing and interpreting sensor data at scale is becoming increasingly challenging, even for experts.

Why current AI methods fall short on physical signals

Applying existing AI techniques to sensor data is challenging for several reasons. High-quality labeled datasets for physical measurements are scarce, and when such data do exist, assigning meaning to them often requires deep domain expertise. That expertise is expensive, difficult to scale, and in some domains simply rare. In many cases, there are only a handful of people capable of interpreting the signals at all.

Moreover, the meaning of sensor data is highly context-dependent. A rise in temperature may indicate boiling water, an overheating engine, or entirely normal operation in a different system. The same numerical pattern can imply very different realities depending on where it occurs and why.

This raises a natural question: could the problem be addressed by combining physical world models, designed specifically to understand sensor data, with large language models, which encode rich qualitative and relational knowledge about the world?

At first glance, this seems impractical. Retraining large language models to interpret time-series sensor data would require enormous datasets and sustained involvement from scarce experts.

But there is another way.

Aligning physical world models with language models

Our recent research paper explores a simple idea: what if, instead of retraining large models, we aligned them with physical world models?

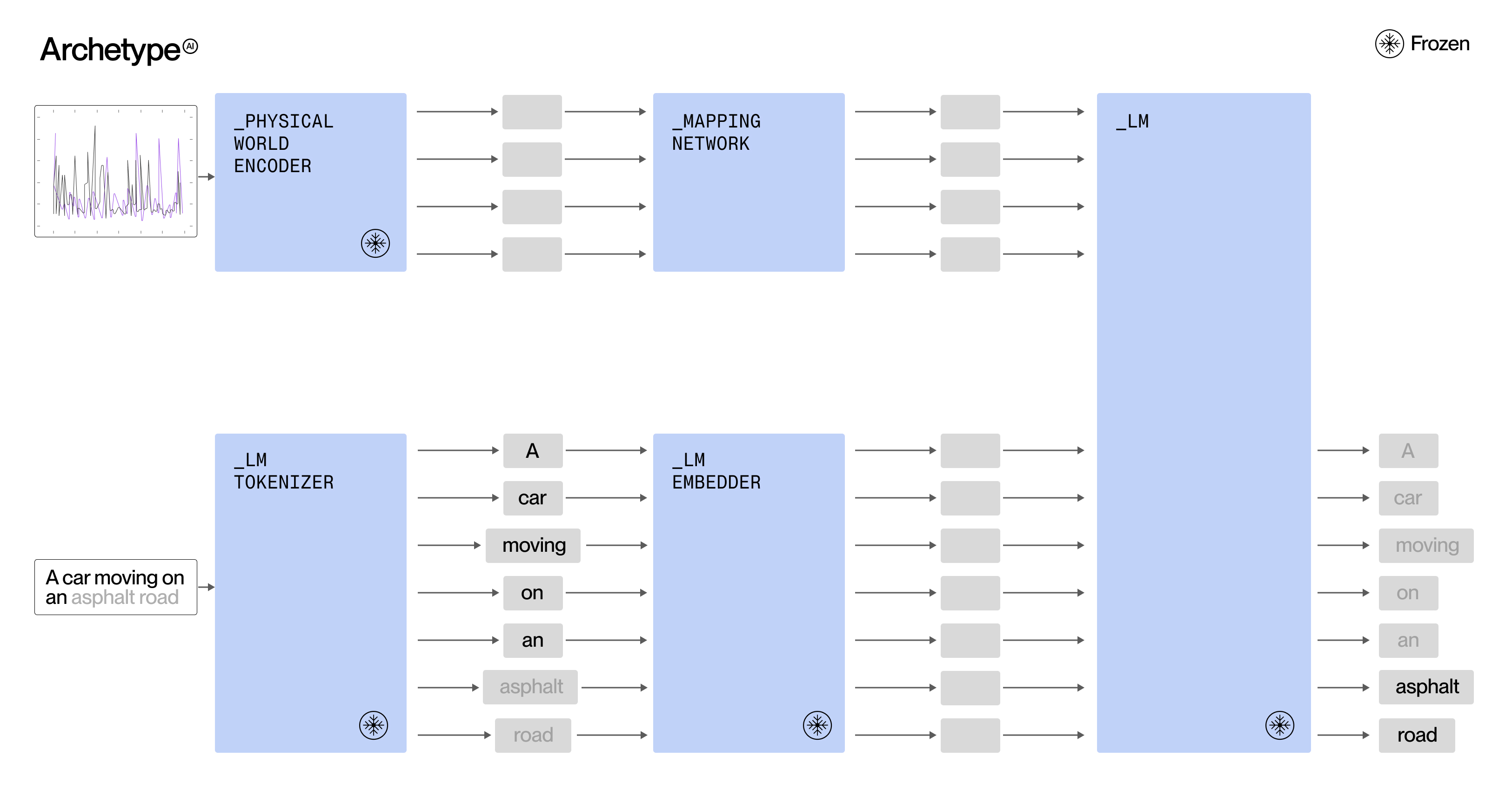

Rather than forcing a language model to learn raw sensor signals directly, we connect two models that already excel at their respective tasks:

- A strong, pretrained physical world encoder that converts raw sensor signals into compact embeddings, capturing physical behavior in a structured and meaningful way

- A strong, pretrained language model that can reason about the world and communicate fluently in natural language

The two are connected using a small learned alignment network that maps between their internal representations.

In our experiments, we pair a foundational physical world encoder—the Newton Omega encoder—with a frozen large language model, Llama-2-7B-Chat. Only the alignment network, consisting of roughly 3.3 million parameters, is trained. Both large models remain unchanged.
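To make the parameter budget concrete, here is a minimal sketch of what a connector of this size could look like. It is an illustration under stated assumptions, not the architecture from the paper: the encoder width (`D_ENC`), the two-layer MLP shape, and the hidden width (`D_HIDDEN`, picked only so the total lands near the reported 3.3 million) are all hypothetical. Only the output width of 4096 corresponds to the hidden size of Llama-2-7B.

```python
import numpy as np

D_ENC = 768     # assumed encoder embedding width (hypothetical)
D_HIDDEN = 678  # assumed hidden width, picked only to land near 3.3M parameters
D_LLM = 4096    # hidden size of Llama-2-7B


def init_connector(rng):
    """Create the only trainable weights in the system: a two-layer MLP."""
    return {
        "w1": rng.normal(0.0, 0.02, (D_ENC, D_HIDDEN)),
        "b1": np.zeros(D_HIDDEN),
        "w2": rng.normal(0.0, 0.02, (D_HIDDEN, D_LLM)),
        "b2": np.zeros(D_LLM),
    }


def connector_forward(params, z):
    """Map encoder embeddings (batch, D_ENC) into the LLM space (batch, D_LLM)."""
    hidden = np.maximum(z @ params["w1"] + params["b1"], 0.0)  # ReLU
    return hidden @ params["w2"] + params["b2"]


def count_parameters(params):
    """Total number of scalar weights in the connector."""
    return sum(p.size for p in params.values())


rng = np.random.default_rng(0)
connector = init_connector(rng)
sensor_embeddings = rng.normal(size=(2, D_ENC))  # stand-in for encoder output
projected = connector_forward(connector, sensor_embeddings)

n_trainable = count_parameters(connector)
share = n_trainable / 7e9  # relative to a nominal 7 billion frozen LLM weights
print(projected.shape, n_trainable, f"{share:.4%}")
```

Under these assumptions the connector holds about 3.3 million weights, which is under 0.05% of a nominal 7 billion language-model parameters.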

Training this connector takes less than half an hour on a single modern GPU, demonstrating that meaningful integration between physical signals and language can be achieved with minimal additional computation.
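The training setup can be illustrated with a toy example in which gradients update only the connector while everything else stays fixed. This is a deliberately simplified sketch: the frozen encoder and the language-side targets are random stand-ins, the data is synthetic, and a mean-squared-error loss replaces whatever objective the paper actually uses. All names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
D_SIGNAL, D_ENC, D_LLM, N = 32, 8, 16, 64  # toy sizes (assumptions)

# Frozen parts: a stand-in encoder and stand-in language-side target vectors.
frozen_encoder = rng.normal(0.0, 1.0 / np.sqrt(D_SIGNAL), (D_SIGNAL, D_ENC))
frozen_targets = rng.normal(0.0, 1.0, (2, D_LLM))
encoder_before = frozen_encoder.copy()
targets_before = frozen_targets.copy()

# Synthetic two-class "sensor windows": a class pattern plus small noise.
patterns = rng.normal(0.0, 1.0, (2, D_SIGNAL))
labels = rng.integers(0, 2, N)
signals = patterns[labels] + 0.1 * rng.normal(size=(N, D_SIGNAL))

# Trainable part: one affine map, the only weights that receive updates.
w = np.zeros((D_ENC, D_LLM))
b = np.zeros(D_LLM)


def loss_and_grads(w, b):
    """MSE between projected embeddings and targets, with connector gradients."""
    z = signals @ frozen_encoder  # forward pass through the frozen encoder
    err = z @ w + b - frozen_targets[labels]
    loss = np.mean(err ** 2)
    grad_w = 2.0 * z.T @ err / err.size
    grad_b = 2.0 * err.sum(axis=0) / err.size
    return loss, grad_w, grad_b


initial_loss, _, _ = loss_and_grads(w, b)
for _ in range(500):
    _, grad_w, grad_b = loss_and_grads(w, b)
    w -= 0.1 * grad_w  # plain gradient descent on the connector only
    b -= 0.1 * grad_b
final_loss, _, _ = loss_and_grads(w, b)

print(f"loss: {initial_loss:.3f} -> {final_loss:.3f}")
```

Because no gradient is ever computed for the frozen parts, the cost of each step scales with the size of the connector, which is one plausible reason training can stay short.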

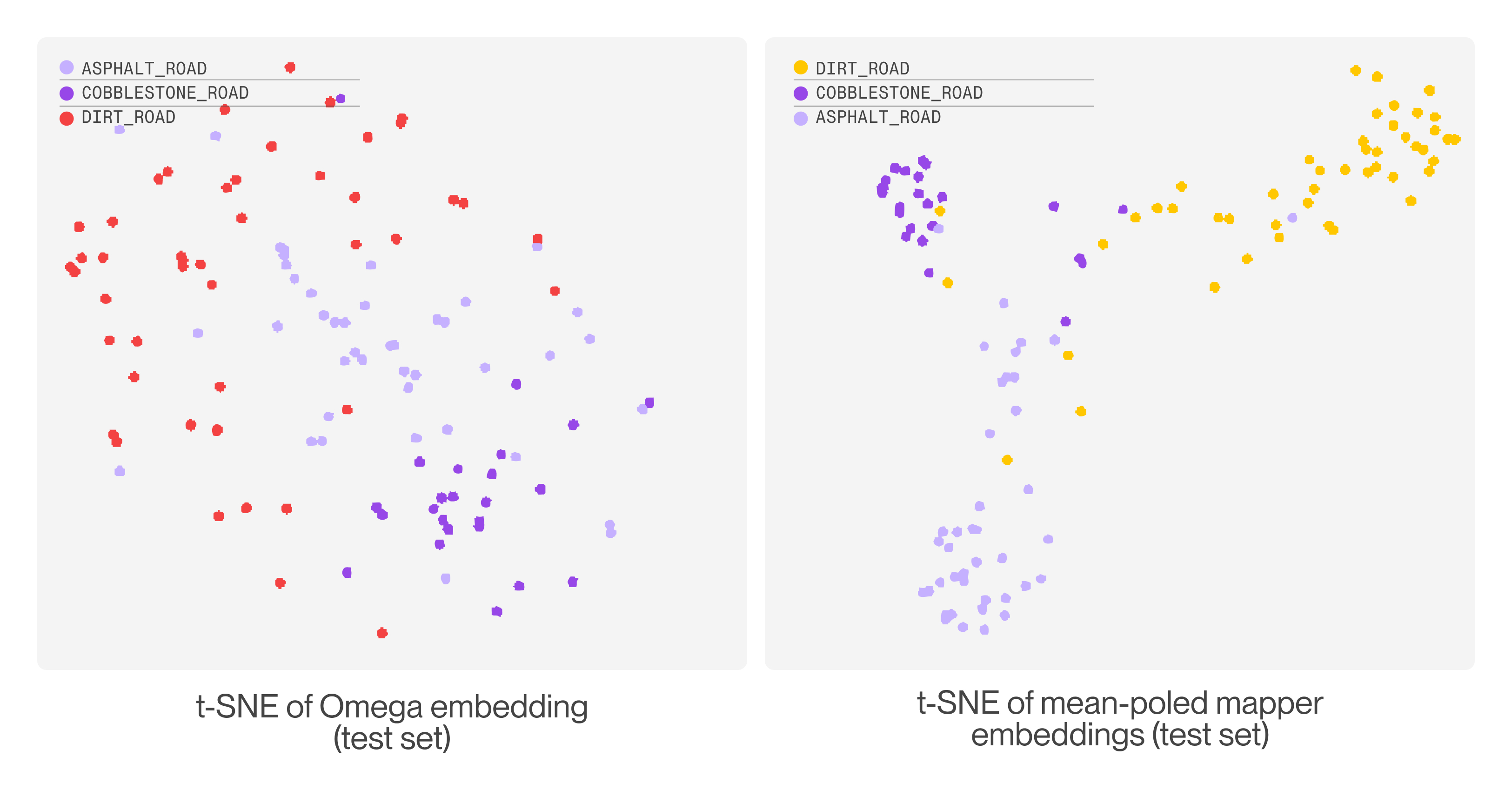

Semantic structure in physical embeddings

A key observation makes this approach possible. When we examined the embeddings produced by the Newton physical world encoder, we found that signals with similar qualitative properties naturally cluster together. For example, automotive sensor signals associated with road surfaces of comparable roughness form tight groups, while smooth and uneven patterns separate cleanly. In this embedding space, physical behavior organizes itself semantically rather than merely numerically.

In other words:

The Newton physical world model does not merely compress sensor data—it organizes physical behaviors into meaningful structure.
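One way to check for this kind of structure is to compare how far apart class centroids are with how spread out each class is. The sketch below does this on synthetic data. The two Gaussian blobs stand in for "smooth" and "rough" road embeddings, and the `separation_ratio` helper is a hypothetical metric chosen for illustration, not the analysis used in the paper.

```python
import numpy as np


def separation_ratio(embeddings, labels):
    """Mean distance between class centroids divided by mean within-class spread."""
    classes = np.unique(labels)
    centroids = np.stack([embeddings[labels == c].mean(axis=0) for c in classes])
    spread = np.mean([
        np.linalg.norm(embeddings[labels == c] - centroids[i], axis=1).mean()
        for i, c in enumerate(classes)
    ])
    pairwise = np.linalg.norm(centroids[:, None, :] - centroids[None, :, :], axis=-1)
    between = pairwise[np.triu_indices(len(classes), k=1)].mean()
    return between / spread


rng = np.random.default_rng(0)
smooth = rng.normal(0.0, 1.0, (200, 16))  # synthetic "smooth road" embeddings
rough = rng.normal(5.0, 1.0, (200, 16))   # synthetic "rough road" embeddings
embeddings = np.vstack([smooth, rough])
labels = np.array([0] * 200 + [1] * 200)

structured = separation_ratio(embeddings, labels)
shuffled = separation_ratio(embeddings, rng.permutation(labels))  # baseline
print(f"true labels: {structured:.2f}, shuffled labels: {shuffled:.2f}")
```

A ratio well above the shuffled-label baseline suggests that the labels line up with real groupings in the embedding space rather than with arbitrary partitions of it.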

Translating sensor meaning into language

Once sensor data lives in such a structured space, aligning it with language becomes far more feasible than expected. Instead of fine-tuning a language model, we train a small mapping network that learns how to translate physical embeddings into the internal representation space of the language model.

This alignment network effectively learns how to "speak" to the language model—turning sensor-derived meaning into continuous tokens that the model can reason over.
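The idea of continuous tokens can be sketched as follows: a sensor embedding is projected into a few vectors with the same width as the language model's token embeddings, and those vectors are placed in front of the embedded text prompt. Everything here is a toy stand-in under stated assumptions: the sizes, the number of soft tokens (`N_SOFT`), the random "embedding table", the token ids, and the choice to prepend rather than interleave are all hypothetical.

```python
import numpy as np

D_ENC, D_LLM, N_SOFT, VOCAB = 8, 16, 4, 100  # toy sizes (assumptions)
rng = np.random.default_rng(0)

# Stand-in for the frozen LLM's token embedding table.
embedding_table = rng.normal(size=(VOCAB, D_LLM))

# Stand-in for trained alignment weights.
w = rng.normal(0.0, 0.02, (D_ENC, N_SOFT * D_LLM))
b = np.zeros(N_SOFT * D_LLM)


def to_soft_tokens(z):
    """Map one sensor embedding (D_ENC,) to N_SOFT vectors of width D_LLM."""
    return (z @ w + b).reshape(N_SOFT, D_LLM)


def build_llm_input(z, prompt_token_ids):
    """Prepend sensor-derived soft tokens to the embedded text prompt."""
    soft_tokens = to_soft_tokens(z)
    prompt_embeddings = embedding_table[prompt_token_ids]
    return np.concatenate([soft_tokens, prompt_embeddings], axis=0)


sensor_embedding = rng.normal(size=D_ENC)
prompt_token_ids = np.array([5, 17, 42])  # hypothetical ids for a short question
llm_input = build_llm_input(sensor_embedding, prompt_token_ids)
print(llm_input.shape)
```

The language model then processes this combined sequence as if every row were an ordinary token embedding, which is why its own weights do not need to change.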

If a physical world encoder produces embeddings with semantic structure, a lightweight bridge can connect those embeddings to a language model—enabling natural-language reasoning over physical signals.

We are not rebuilding intelligence. We are aligning existing forms of intelligence.

Talking to sensor data: automotive example

Once aligned, the system does more than output labels or scores. It can answer questions about sensor data in natural language.

In the case of automotive data, instead of navigating dashboards, a user can ask:

"Did anything unusual happen during this drive segment?"

Yes—there is a clear pattern change consistent with a rougher surface beginning midway through the segment.

"Was this closer to smooth asphalt or a rough surface?"

It is closer to a rough surface, with frequent high-variation fluctuations typical of uneven texture.

"Give me a one-line summary I can send to the team."

Road quality briefly degrades due to a localized rough patch, then returns to smoother conditions.

This represents a different interaction model altogether. Rather than analyzing numbers, users ask questions—and receive qualitative, contextual explanations. By adjusting the prompt, the same system can interpret signals from a car, a kettle, or a wind-turbine gearbox.

Why this matters

Sensor analytics underpins modern transportation, manufacturing, infrastructure, and health technologies. Yet access to insight is often limited by access to expertise and tooling.

Most people do not want more charts. They want answers: What happened? Where did it happen? What changed? What should we do next?

Our technique offers several benefits:

- It gives everyday workers access to expert-level sensor insight without requiring years of specialized training.

- Interaction through natural language is intuitive and expressive: instead of navigating complex interfaces, people can speak directly to their machines and equipment, asking questions, setting context, and receiving meaningful explanations. In many settings, this could replace layers of dashboards, alerts, and custom tooling with a single, conversational interface.

- The approach is lightweight and practical. Because only a small alignment network is trained, systems can be updated frequently, adapted to new environments, and even retrained at the edge. Over time, they can evolve through additional feedback from real users, steadily improving how physical behavior is interpreted and communicated.

Taken together, this work points toward a new interface for physical AI—one in which humans no longer have to adapt to sensor data, but sensor data adapts to how humans think, communicate, and act.

We are continuing to build on this foundation and will share further results as the approach matures.

Full methodology and experiments can be found in the recently published paper, Roadsense-LM: Aligning Time-Series Sensors with Frozen Language Models, by Hasan Doğan, Jaime Lien, Laura I. Galindez Olascoaga, and Muhammed Selman Artıran.
