Operational excellence in manufacturing depends on maintaining safe working conditions, ensuring quality processes, and preventing costly equipment failures. Yet despite massive investments in monitoring systems, 70-90% of industrial sensor data goes to waste. Traditional approaches require building bespoke machine learning models for every use case and sensor type, a process that can take over 12 months per model and five or more ML engineers for each application.

At Archetype AI, we're taking a fundamentally different approach. With Newton AI, our horizontal platform powered by a foundation model trained on real-world sensor data, organizations can access tools for rapidly building and deploying custom AI applications through a simple API and no-code interface. Let's explore how Newton AI is transforming the manufacturing landscape and creating new opportunities.

Solving the Sensor Fusion Challenge with AI

Manufacturing facilities run on sensor data. Millions of sensors operate across factory floors, capturing everything from vibration and temperature readings to equipment performance and worker motion patterns. Interpreting one sensor signal in isolation is straightforward, but fusing data from multiple distributed sensors into a single, actionable interpretation remains a challenge.

Humans have a unique ability to connect disparate sensory cues and contextual information to create a narrative or make quick, intuitive decisions. Automated sensing systems struggle with this, and the challenge grows exponentially as the number of sensors, locations, and event sequences increases. Even for very simple systems, the number of potential interpretations quickly becomes overwhelming. For example, a system with just two binary sensors would generate 1,536 possible scenarios, and adding just one more binary sensor increases that number to 24,576!
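The post does not say how the 1,536 and 24,576 figures were counted, so the sketch below uses a simpler, assumed model purely to show the shape of the growth: each scenario is an ordered sequence of joint on/off states over a fixed number of time steps, giving (2^n)^T histories for n binary sensors and T steps. Under this assumption, adding one sensor multiplies the count by 2^T; with T = 4 that factor is 16, the same ratio as between the two figures above (24,576 / 1,536 = 16), although the absolute numbers differ. The function name `count_histories` is illustrative.

```python
def count_histories(num_sensors: int, num_steps: int) -> int:
    """Count distinct ordered histories of binary sensor readings.

    Assumed model (illustrative only): at each time step every sensor is
    either on or off, and a "scenario" is the full sequence of joint states.
    """
    joint_states = 2 ** num_sensors   # on/off combinations at a single instant
    return joint_states ** num_steps  # one joint state per step, order matters


for sensors in (1, 2, 3, 4):
    print(sensors, count_histories(sensors, num_steps=4))
# prints 16, 256, 4096, 65536 for 1, 2, 3, 4 sensors

# Each extra sensor multiplies the count by 2 ** num_steps (16 when num_steps=4).
print(count_histories(3, 4) // count_histories(2, 4))  # 16
```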


This happens because most real-world processes depend on both current observations and past events. Identical sensor readings can mean completely different things based on what happened before, making it virtually impossible to manually program robust systems that cover all scenarios.
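A toy example makes the history problem concrete. In the sketch below, a hand-written interpreter (hypothetical class and event names, not part of Newton) receives the same `zone_entry` reading in two situations and must remember whether a machine was started earlier to interpret it correctly.

```python
from dataclasses import dataclass


@dataclass
class ZoneInterpreter:
    """Toy rule-based interpreter whose output depends on remembered history.

    Event names are hypothetical; this only illustrates why context matters.
    """
    machine_running: bool = False  # state carried over from earlier events

    def observe(self, event: str) -> str:
        if event == "machine_start":
            self.machine_running = True
            return "machine started"
        if event == "machine_stop":
            self.machine_running = False
            return "machine stopped"
        if event == "zone_entry":
            # Identical reading from the presence sensor; its meaning depends on the past.
            if self.machine_running:
                return "hazard: person entered zone while machine is running"
            return "routine: person entered zone while machine is idle"
        return f"unrecognized event: {event}"


a = ZoneInterpreter()
a.observe("machine_start")
print(a.observe("zone_entry"))  # hazard: person entered zone while machine is running

b = ZoneInterpreter()
print(b.observe("zone_entry"))  # routine: person entered zone while machine is idle
```

Even this single rule needs state; every additional sensor, zone, or shift context multiplies the branches a hand-coded system must cover.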

Newton is a Physical AI foundation model and platform that accelerates building and deploying sensor-based intelligence. Newton provides out-of-the-box sensor fusion for 100% of manufacturing data and the ability to program a solution with natural language in minutes, instead of years. Newton can support millions of use cases by fusing simple sensor data with contextual awareness.

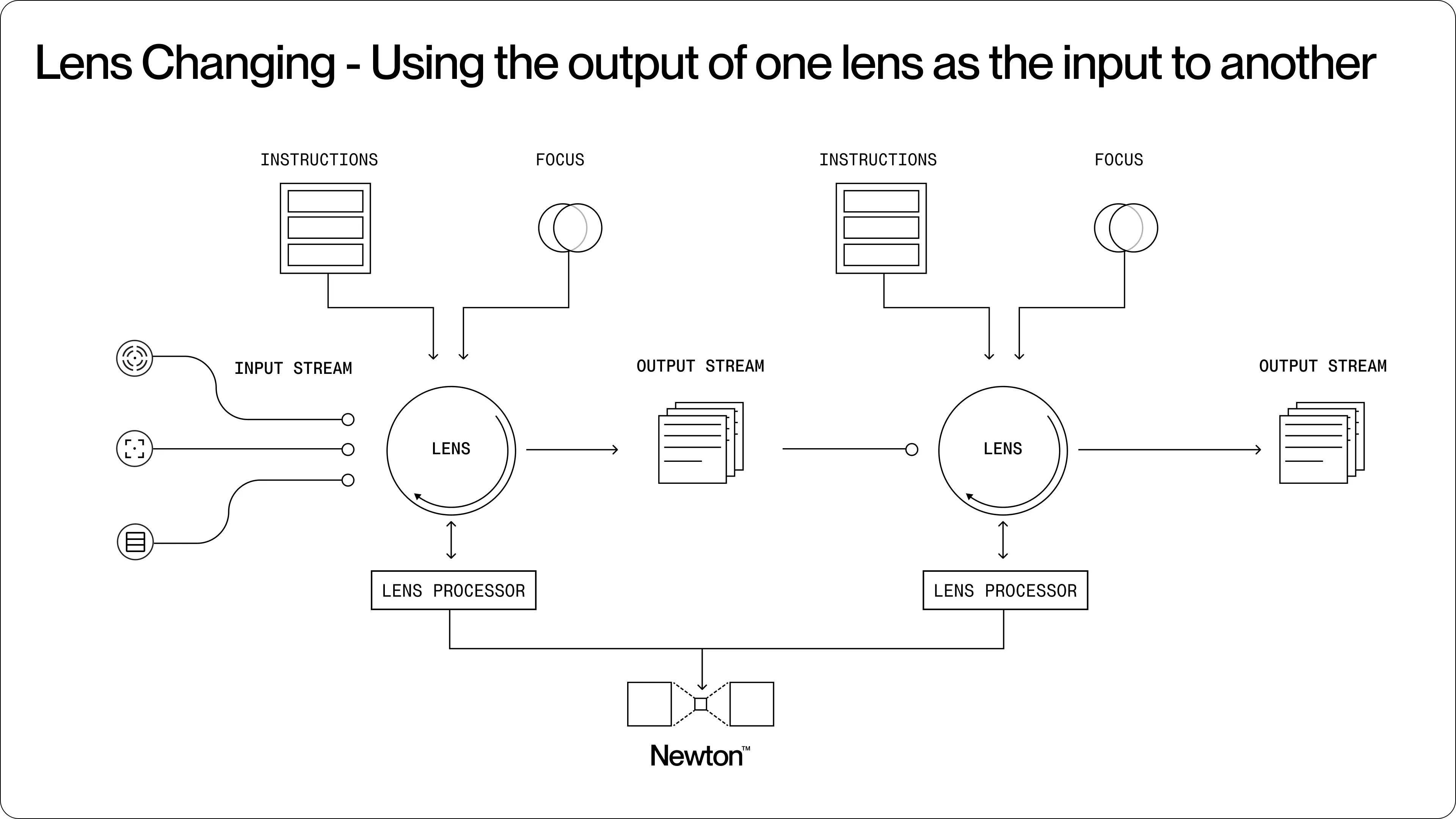

Manufacturers can build multiple Lenses (custom applications powered by Newton) and connect them into a larger workflow. When combined with additional contextual data, such as location, time, shift schedules, production targets, or safety protocols, the Newton model can provide relevant recommendations for different scenarios with no additional model training required.

Newton AI & Contextual Understanding for Manufacturing

Let's look at how Newton's capabilities translate into practical manufacturing use cases, focusing on three key areas: worker safety monitoring, workplan validation, and predictive maintenance through anomaly detection.

Worker Safety Monitoring

Manufacturing safety goes beyond simple motion detection and cameras. Newton can combine data from various sensors — cameras for human activity understanding, microphones for audio cues, equipment control streams, contextual information — to create a comprehensive understanding of a situation and identify potential safety hazards in real time.

The warehouse safety demo above shows a Worker Safety Lens powered by Newton. Using video processed at the edge, the Lens analyzes a warehouse environment to predict potential safety risks, detect when workers are exposed to them, and trigger alerts and alarms to intervene. The Lens generates yellow and green overlays on top of the video feed, identifying worker and machine operating areas. The overlap is where the danger is.
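Geometrically, the overlay idea reduces to intersecting two regions. The sketch below assumes each operating area is an axis-aligned rectangle in pixel coordinates, which is a simplification; the post does not describe how the Lens represents zones, and the `Box` type and coordinates are hypothetical.

```python
from typing import NamedTuple, Optional


class Box(NamedTuple):
    """Axis-aligned region in pixel coordinates: (x0, y0) top-left, (x1, y1) bottom-right."""
    x0: float
    y0: float
    x1: float
    y1: float


def overlap(a: Box, b: Box) -> Optional[Box]:
    """Return the intersection of two regions, or None if they do not overlap."""
    x0, y0 = max(a.x0, b.x0), max(a.y0, b.y0)
    x1, y1 = min(a.x1, b.x1), min(a.y1, b.y1)
    if x0 >= x1 or y0 >= y1:
        return None  # touching edges or disjoint: no shared area
    return Box(x0, y0, x1, y1)


def area(box: Box) -> float:
    return (box.x1 - box.x0) * (box.y1 - box.y0)


worker_zone = Box(100, 200, 300, 400)   # hypothetical worker operating area
machine_zone = Box(250, 150, 500, 350)  # hypothetical machine/vehicle operating area

danger = overlap(worker_zone, machine_zone)
if danger is not None:
    print(f"danger region {danger}, area {area(danger)} px^2")
    # danger region Box(x0=250, y0=200, x1=300, y1=350), area 7500 px^2
```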

Here's how the Worker Safety Lens works:

- Continuous monitoring: By analyzing the camera stream, Newton can make sense of the scene, predict safety risks, and understand when workers are exposed to danger.

- Contextual awareness: When Newton sees that workers are doing expected activities in their usual areas, it recognizes this as standard operation and maintains baseline monitoring.

- Hazard identification: If a worker enters a transit area, however, Newton can immediately detect this as a safety hazard and trigger appropriate alerts to supervisors and nearby workers.

- Adaptive responses: When live safety risks are detected, the Lens can automatically trigger safety protocols like shutting down equipment, activating warning lights, or sending alerts to safety personnel.
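Recognizing the situation is the part the post attributes to Newton; turning a reported situation into actions is ordinary application code. A minimal sketch of that downstream dispatch, assuming the Lens emits one of three hypothetical labels that mirror the bullets above (the label and action names are illustrative, not Newton's API):

```python
from enum import Enum


class Risk(Enum):
    """Hypothetical situation labels a safety Lens might report."""
    NORMAL = "normal"        # expected activity in the usual area
    HAZARD = "hazard"        # e.g., a worker has entered a transit area
    LIVE_RISK = "live_risk"  # e.g., a worker is exposed to moving equipment right now


# Hypothetical action names; a real deployment would call plant control
# and notification systems here.
RESPONSES = {
    Risk.NORMAL: ["continue_baseline_monitoring"],
    Risk.HAZARD: ["alert_supervisor", "alert_nearby_workers"],
    Risk.LIVE_RISK: ["stop_equipment", "activate_warning_lights", "alert_safety_team"],
}


def respond(risk: Risk) -> list[str]:
    """Map a reported situation to the actions to trigger."""
    return list(RESPONSES[risk])  # copy so callers cannot mutate the table


print(respond(Risk.HAZARD))  # ['alert_supervisor', 'alert_nearby_workers']
```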

Unlike traditional safety systems that rely on rigid rules and often generate false alarms, the Newton foundation model enables developers to create applications that can adapt to the context of manufacturing processes, significantly improving the accuracy of safety responses.

Workplan Validation

Manufacturing efficiency depends on ensuring that production processes follow established workflows and quality standards. Developers can build Lenses powered by Newton AI that verify in real time that workplans are being followed.

Here is how our foundation model, Newton, can monitor multiple data streams at the same time and confirm that work is being performed according to specifications:

- Process adherence: By analyzing video feeds and equipment sensor data, Newton can verify that assembly or production steps are being followed and done in the correct order.

- Quality assurance: Monitoring the available sensor streams, Newton automatically logs compliance or flags deviations.

- Automated documentation: Newton can automatically generate compliance reports and process verification logs based on sensor observations.
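Once a Lens has turned video and equipment streams into a sequence of recognized steps, checking adherence to a workplan can be as simple as comparing sequences. The sketch below assumes that step recognition has already happened upstream; the step labels and the `check_workplan` function are hypothetical, and the strict position-by-position comparison is a simplification (real workplans may allow optional or parallel steps).

```python
def check_workplan(plan: list[str], observed: list[str]) -> dict:
    """Compare observed steps with the planned order and return a compliance log."""
    deviations = []
    for i, expected in enumerate(plan):
        if i >= len(observed):
            deviations.append(f"step {i + 1}: '{expected}' not observed")
        elif observed[i] != expected:
            deviations.append(
                f"step {i + 1}: expected '{expected}', observed '{observed[i]}'"
            )
    for extra in observed[len(plan):]:
        deviations.append(f"unexpected extra step '{extra}'")
    return {"compliant": not deviations, "deviations": deviations}


plan = ["fit_bracket", "insert_bolts", "torque_bolts", "inspect"]
observed = ["fit_bracket", "torque_bolts", "insert_bolts", "inspect"]  # two steps swapped
report = check_workplan(plan, observed)
print(report["compliant"])  # False
for line in report["deviations"]:
    print(line)
```

The returned dictionary doubles as a simple verification log: an empty `deviations` list records compliance, and a non-empty one records exactly which steps were flagged.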

This creates a more productive manufacturing environment: with fewer tedious manual tasks, operators can focus on more strategic work that drives business impact.

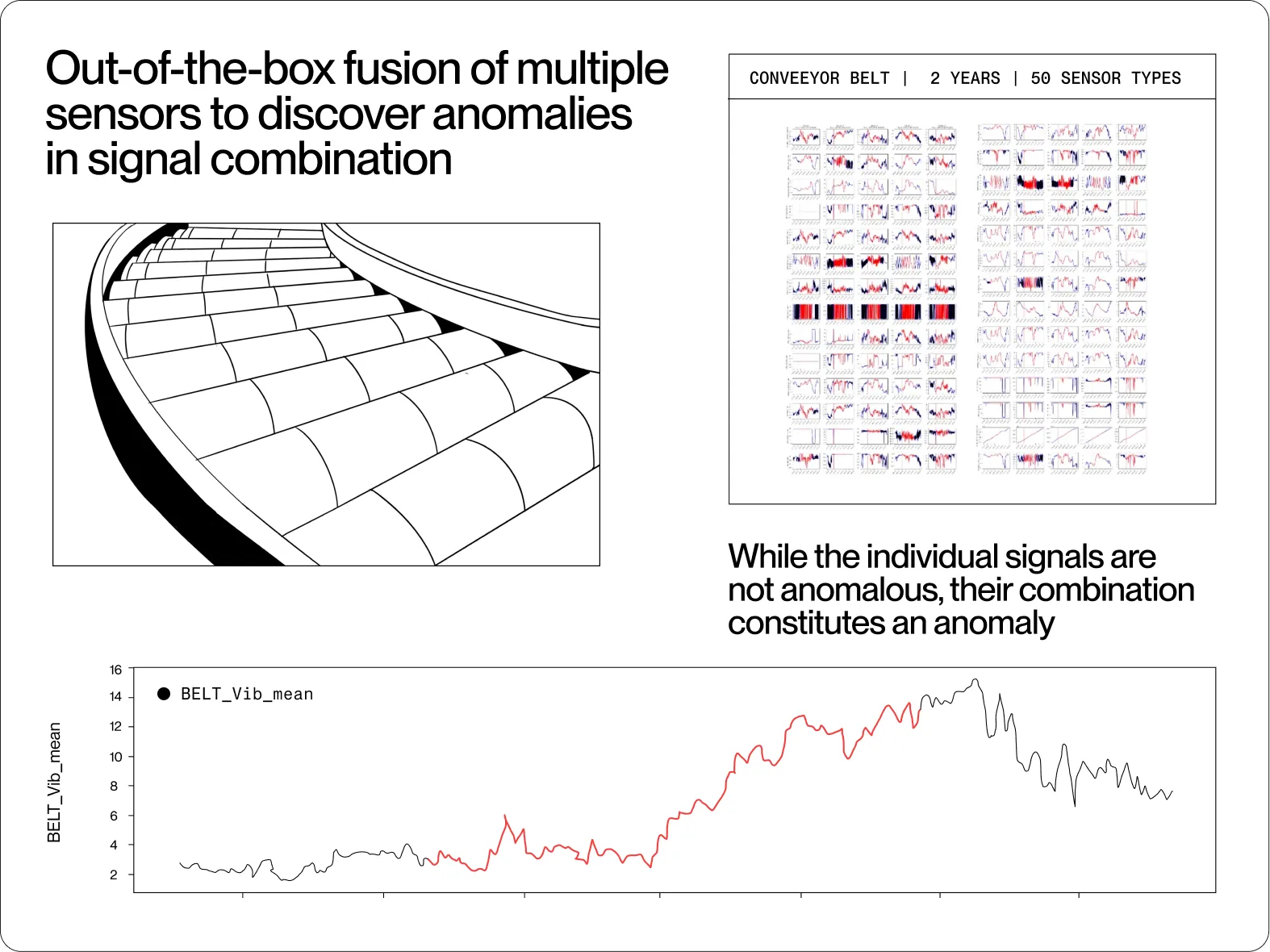

Predictive Maintenance Through Anomaly Detection

Manufacturing equipment failures result in costly downtime, safety hazards, and production delays. Newton's anomaly detection capabilities enable predictive maintenance by identifying unusual patterns before they become critical failures.

The image above shows how a Lens fuses multiple sensors together to discover anomalies in signal combinations. For example, in a conveyor belt system spanning the length of a packaging facility, Newton could be used to fuse and analyze temperature sensors, belt speed measurements, gearbox vibration data, and camera footage of the belt’s surface condition to identify multivariate anomalies. While each signal may appear normal on its own (temperature elevated but within acceptable ranges, belt speed consistent, vibration high but not abnormal), their combined pattern, together with camera footage showing surface wear, could reveal early signs of an impending belt failure within the next couple of days.
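The intuition that individually acceptable signals can be jointly anomalous can be illustrated with the Mahalanobis distance, a standard multivariate statistic swapped in here for illustration; the post does not say Newton uses it. The sketch assumes two made-up signals, belt speed and gearbox vibration, that normally rise together, and uses only the Python standard library.

```python
import math
from statistics import fmean

# Made-up normal-operation samples: (belt speed in m/s, gearbox vibration in mm/s).
# Under normal load, vibration rises roughly in step with speed.
NORMAL = [(1.0, 0.50), (1.2, 0.62), (1.4, 0.69), (1.6, 0.81), (1.8, 0.89), (2.0, 1.01)]
THRESHOLD = 3.0  # arbitrary cut-off for this illustration


def fit_baseline(samples):
    """Return the mean vector and 2x2 sample covariance as (sxx, sxy, syy)."""
    xs = [x for x, _ in samples]
    ys = [y for _, y in samples]
    mx, my = fmean(xs), fmean(ys)
    n = len(samples) - 1
    sxx = sum((x - mx) ** 2 for x in xs) / n
    syy = sum((y - my) ** 2 for y in ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    return (mx, my), (sxx, sxy, syy)


def mahalanobis(point, mean, cov):
    """Distance from the baseline that accounts for correlation between signals."""
    sxx, sxy, syy = cov
    det = sxx * syy - sxy ** 2
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    # Quadratic form d^T C^-1 d using the closed-form inverse of a 2x2 matrix.
    d2 = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return math.sqrt(d2)


def within_ranges(point, samples):
    """Naive per-signal check: is each value inside its observed min/max?"""
    return all(
        min(s[i] for s in samples) <= point[i] <= max(s[i] for s in samples)
        for i in range(2)
    )


mean, cov = fit_baseline(NORMAL)
for label, reading in [("fast, high vibration", (2.0, 1.0)),
                       ("slow, high vibration", (1.0, 1.0))]:
    dist = mahalanobis(reading, mean, cov)
    print(label, "| in range:", within_ranges(reading, NORMAL),
          "| anomaly:", dist > THRESHOLD)
```

Both test readings sit inside the per-signal min/max ranges of the normal data, but the second breaks the usual speed-vibration relationship, so only its distance exceeds the threshold.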

Here's how predictive maintenance works with Newton:

- Monitoring: By continuously observing vibration sensors, temperature readings, and other equipment parameters, Newton learns what constitutes normal operation for each piece of machinery.

- Pattern recognition: Parameters such as rotation speed, vibration, and temperature can each appear normal, yet analyzing them together can reveal patterns that signal potential issues.

- Contextual understanding: Newton can take into account production schedules, environmental conditions, and equipment age to differentiate between normal variations and anomalies.

- Alerts: Rather than waiting for a catastrophic failure, Newton can provide early warnings that allow maintenance teams to minimize disruption to production.
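Learning what counts as normal, and letting that depend on context, can be sketched with per-context baselines: each reading is scored against the history of readings taken under the same operating conditions. This z-score approach is a simple statistical stand-in, not Newton's method, and the context labels, temperatures, and threshold are hypothetical.

```python
from statistics import fmean, stdev


class ContextualBaseline:
    """Keeps a separate 'normal' baseline for each operating context."""

    def __init__(self, z_threshold: float = 3.0):
        self.history: dict[str, list[float]] = {}
        self.z_threshold = z_threshold

    def learn(self, context: str, value: float) -> None:
        self.history.setdefault(context, []).append(value)

    def check(self, context: str, value: float) -> str:
        past = self.history.get(context, [])
        if len(past) < 2:
            return "insufficient baseline"
        mu, sigma = fmean(past), stdev(past)
        if sigma == 0:
            z = 0.0 if value == mu else float("inf")
        else:
            z = (value - mu) / sigma
        return "early warning" if abs(z) > self.z_threshold else "normal variation"


baseline = ContextualBaseline()
for temp in [60, 61, 59, 60, 62, 58]:   # standard production mode
    baseline.learn("standard", temp)
for temp in [70, 71, 69, 72, 68, 70]:   # high-throughput mode runs hotter
    baseline.learn("high_throughput", temp)

print(baseline.check("high_throughput", 71))  # normal variation
print(baseline.check("standard", 71))         # early warning
```

The same gearbox temperature of 71 sits comfortably within the high-throughput baseline but triggers an early warning against the standard-mode baseline.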

This approach reduces unexpected downtime and optimizes maintenance costs by focusing attention where it's needed most, when it's needed most.

The Future of AI for Manufacturing

The manufacturing industry has long struggled with fragmented systems and isolated data sources. Newton offers a path forward with its unified multimodal approach. The model enables faster deployment of intelligent systems by reducing the complexity of working with diverse data sources.

Our approach also unlocks value from pre-existing sensor infrastructure. Most manufacturing facilities already have extensive sensor networks with powerful data collection capabilities, and by building on this installed base, Newton can extract more value from industrial sensors that have already been deployed.

Newton also supports workflows that include several Lenses, where the output of one Lens becomes the input to another, enabling more sophisticated analysis. For example, by combining Safety, Workplan Validation, and Predictive Maintenance Lenses, manufacturers can use Newton as a comprehensive manufacturing intelligence platform that monitors, analyzes, and optimizes operations across the whole organization.
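The post does not describe Newton's workflow interface, so the following is a hypothetical pattern rather than its API: each Lens is modeled as a plain function that reads a shared state dictionary and returns new fields, and a workflow runs them in order so later Lenses can consume earlier outputs. The Lens functions and field names are illustrative.

```python
from typing import Any, Callable, Dict, List

# Hypothetical model of a Lens: a function from current state to new fields.
Lens = Callable[[Dict[str, Any]], Dict[str, Any]]


def run_workflow(lenses: List[Lens], inputs: Dict[str, Any]) -> Dict[str, Any]:
    """Run Lenses in order; each sees the inputs plus all earlier outputs."""
    state = dict(inputs)
    for lens in lenses:
        state.update(lens(state))
    return state


def maintenance_lens(s: Dict[str, Any]) -> Dict[str, Any]:
    # Flags the conveyor when an upstream anomaly score crosses a threshold.
    return {"conveyor_at_risk": s["anomaly_score"] > 3.0}


def workplan_lens(s: Dict[str, Any]) -> Dict[str, Any]:
    return {"steps_in_order": s["observed_steps"] == s["planned_steps"]}


def safety_lens(s: Dict[str, Any]) -> Dict[str, Any]:
    # Consumes the maintenance Lens output: escalate if a worker is near an at-risk conveyor.
    return {"alert": s["worker_near_conveyor"] and s["conveyor_at_risk"]}


result = run_workflow(
    [maintenance_lens, workplan_lens, safety_lens],
    {
        "anomaly_score": 4.2,
        "worker_near_conveyor": True,
        "planned_steps": ["load", "seal", "label"],
        "observed_steps": ["load", "seal", "label"],
    },
)
print(result["conveyor_at_risk"], result["steps_in_order"], result["alert"])  # True True True
```

Ordering matters in this design: `safety_lens` must run after `maintenance_lens`, because it reads the `conveyor_at_risk` field that the earlier Lens produces.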

For manufacturers, Newton offers the opportunity to overcome high costs and barriers to using advanced manufacturing intelligence:

- Accelerated deployment: Shorten time-to-value by using a Physical AI foundation model that understands industrial sensor data and can be rapidly configured for specific needs.

- Comprehensive sensor fusion: Create systems that understand operational context beyond isolated sensor alerts, enabling more sophisticated and reliable manufacturing intelligence that can make use of 100% of the available data.

- Cross-system integration: Build solutions that can make use of data from existing manufacturing systems rather than requiring complete infrastructure replacement. New capabilities can work together with legacy systems.

- Continuous improvement: Benefit from Newton's learning capabilities to improve and adapt manufacturing intelligence over time as conditions and requirements evolve.

The trillion sensor economy represents a massive opportunity, and Newton provides the platform to unlock that value across manufacturing operations of all scales and types. From individual machines to entire factory floors, Newton's flexible approach to sensor data enables manufacturers to achieve new levels of safety, efficiency, and operational excellence.